You're staring at a webpage full of data you need. Prices, names, descriptions, links. The information is right there, but it's trapped in HTML structure. CSS selectors are how you tell your scraper exactly which elements to grab.

This cheat sheet covers every CSS selector pattern you'll need for web scraping. Bookmark it. You'll reference it constantly when building extraction rules, debugging selectors that stopped working, or targeting elements that seem unreachable.

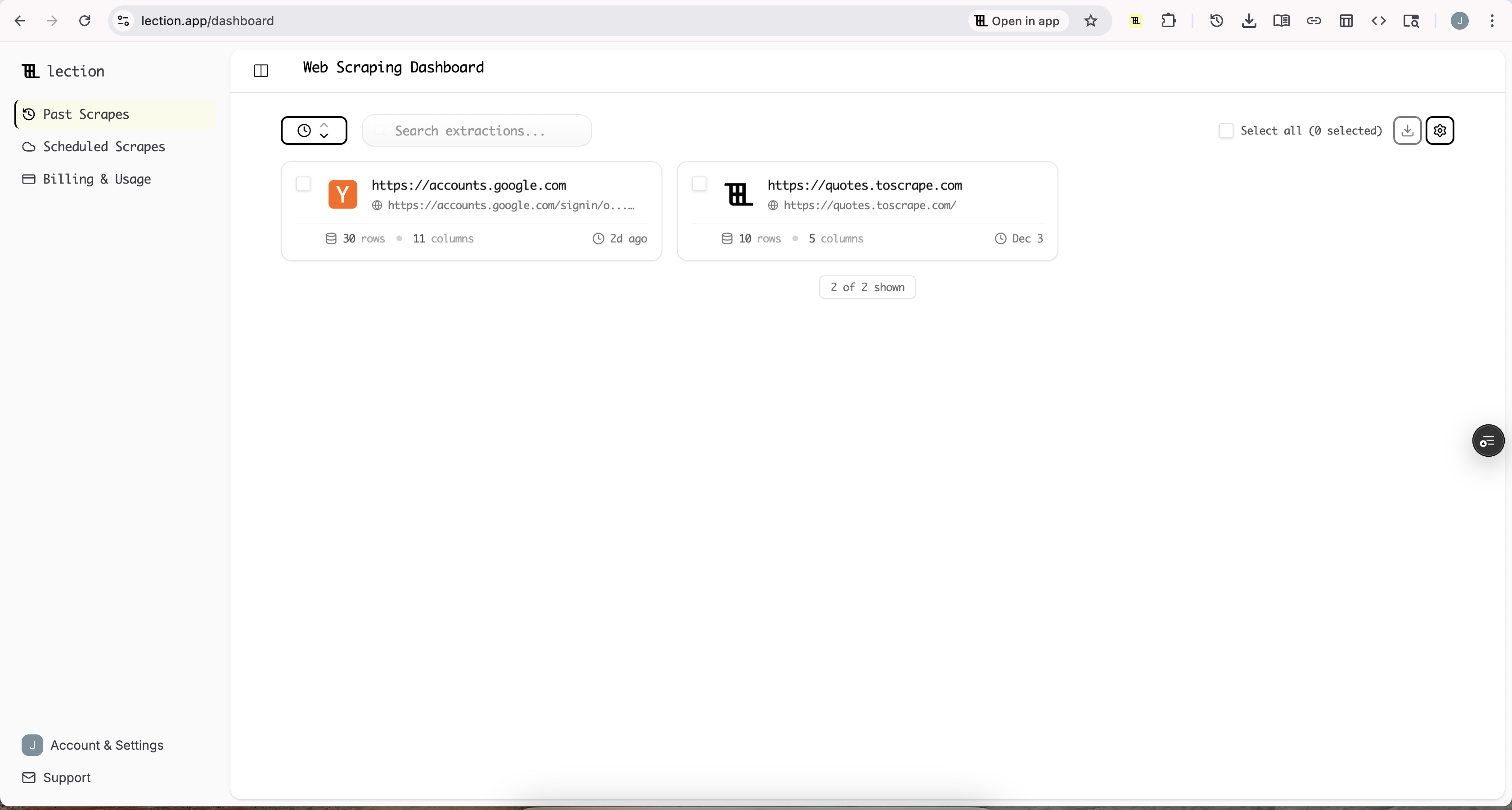

Whether you're using browser developer tools, writing custom scripts, or configuring no-code tools like Lection, understanding CSS selectors is fundamental to effective data extraction.

Why CSS Selectors Matter for Scraping

CSS selectors were designed for styling web pages. But the same precision that lets designers target specific elements makes selectors perfect for data extraction. You can pinpoint exactly which div contains product prices, which span holds author names, or which anchor links lead to detail pages.

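Performance. CSS selectors are fast. Browsers optimize selector matching heavily because it affects page rendering. Some benchmarks show CSS selectors running 23% faster than equivalent XPath expressions, although the gap depends on the tool: some Python libraries, such as lxml, translate CSS selectors into XPath before running them.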

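Readability. A selector like .product-title is immediately understandable. The closest XPath, //div[@class='product-title'], requires more mental parsing. It is also stricter: it only matches a div whose class attribute is exactly "product-title". A faithful equivalent, //*[contains(concat(' ', normalize-space(@class), ' '), ' product-title ')], is harder still to read.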

Browser integration. You can test CSS selectors directly in browser developer tools using document.querySelectorAll('your-selector'). This makes debugging fast and interactive.

Wide support. Every modern scraping tool, from Scrapy to Puppeteer to no-code platforms, supports CSS selectors natively.

Basic Selectors

These foundational selectors target elements by their most common attributes.

Universal Selector

The asterisk (*) selects every element on the page. Rarely useful for scraping since you typically want specific data, not everything.

Use case: Debugging selector scope. div.container * shows all descendants of a container.

Type Selector

Element type selectors like p, div, a, and table select all elements of a specific HTML tag type. Simple but often too broad for precise extraction.

Use case: Extracting all links from a page with a, or all paragraphs with p.

Class Selector

The dot notation (.classname) selects elements with a specific class attribute. This is your workhorse selector for most scraping tasks.

Use case: Product names are often in .product-title, prices in .price or .amount.

Example: For HTML like a span element with class="price" containing $29.99, the selector .price matches it.
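In Python, BeautifulSoup's select() method accepts the same selector strings. The sketch below assumes the third-party beautifulsoup4 package is installed. The HTML snippet is an invented example.

```python
from bs4 import BeautifulSoup  # third-party: pip install beautifulsoup4

# Invented sample markup for illustration
html = """
<div class="product-card">
  <h2 class="product-title">Desk Lamp</h2>
  <span class="price">$29.99</span>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# select() takes a CSS selector and returns a list of matching tags
prices = [tag.get_text(strip=True) for tag in soup.select(".price")]
print(prices)  # ['$29.99']
```

When you only need the first match, select_one() returns a single tag, or None if nothing matches.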

ID Selector

The hash notation (#idname) selects the element with a specific ID. IDs should be unique on a page, making this highly precise.

Use case: Targeting specific page sections like #product-details or #reviews-container.

Gotcha: Many dynamic sites generate random IDs like #react-root-7a3b2c. These break between page loads. Avoid basing extraction on unstable IDs.

Multiple Classes

Chaining classes like .card.featured selects elements that have both classes. No space between class names.

Use case: Distinguishing featured products (.product.featured) from regular ones (.product).
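The difference between chained classes and a space is easy to check in code. Here is a minimal sketch, assuming beautifulsoup4 is installed and using made-up list items:

```python
from bs4 import BeautifulSoup

html = """
<ul>
  <li class="product featured">Lamp</li>
  <li class="product">Chair</li>
  <li class="product featured sale">Desk</li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

all_products = [li.get_text() for li in soup.select(".product")]
# No space: the same element must carry both classes (order in HTML is irrelevant)
featured = [li.get_text() for li in soup.select(".product.featured")]
# With a space: .featured elements *inside* .product elements (none here)
nested = soup.select(".product .featured")

print(all_products)  # ['Lamp', 'Chair', 'Desk']
print(featured)      # ['Lamp', 'Desk']
print(nested)        # []
```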

Combinator Selectors

Combinators express relationships between elements. They're essential for navigating HTML hierarchies.

Descendant Combinator (Space)

A space between two selectors, as in div.products span.price, selects elements that are descendants (children, grandchildren, and so on) of the first element. This is the most common combinator in scraping.

Use case: Prices within a product container: .product-card .price

Child Combinator (>)

The greater-than symbol like ul > li selects only direct children, not deeper descendants. More precise than the descendant combinator.

Use case: Top-level list items only, ignoring nested lists: ul.menu > li

Adjacent Sibling Combinator (+)

The plus sign, as in h2 + p, selects an element that immediately follows another element and shares the same parent.

Use case: The first paragraph after each heading: h2 + p

General Sibling Combinator (~)

The tilde like h2 ~ p selects all siblings following an element, not just the immediate one.

Use case: All paragraphs after a heading in the same container: h2 ~ p
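To see how the four combinators differ, you can run them against the same markup. This sketch assumes beautifulsoup4 is installed, and the menu and post HTML are invented:

```python
from bs4 import BeautifulSoup

html = """
<ul class="menu">
  <li>Home</li>
  <li>Shop
    <ul><li>Lamps</li><li>Chairs</li></ul>
  </li>
</ul>
<div class="post">
  <h2>Title</h2>
  <p>Intro</p>
  <p>Body</p>
  <div>Aside</div>
  <p>Footer note</p>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

descendants = soup.select("ul.menu li")   # every li at any depth
children = soup.select("ul.menu > li")    # only the two top-level items
adjacent = [p.get_text() for p in soup.select("div.post h2 + p")]  # next sibling only
general = [p.get_text() for p in soup.select("div.post h2 ~ p")]   # all later p siblings

print(len(descendants), len(children))  # 4 2
print(adjacent)  # ['Intro']
print(general)   # ['Intro', 'Body', 'Footer note']
```

The general sibling combinator skips over the intervening div and still reaches the last paragraph.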

Attribute Selectors

Attribute selectors target elements based on their HTML attributes. Powerful for scraping because data attributes often contain stable identifiers.

Has Attribute

Square brackets like [data-price] select elements that have the attribute, regardless of value.

Use case: All elements with pricing data: [data-price]

Exact Match

Using equals like [data-testid="product-title"] selects elements where the attribute exactly matches the value.

Use case: Target test IDs that developers add for automation: [data-testid="add-to-cart"]

Pro tip: data-testid, data-qa, and data-test attributes are goldmines for scraping. They're added for testing purposes and rarely change, making selectors more stable than class-based ones.

Starts With (^=)

The caret equals like [href^="https://"] selects elements where the attribute value starts with the specified string.

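Use case: Absolute HTTPS links: a[href^="https://"]. This also matches absolute links back to the same site, so it separates absolute URLs from relative ones rather than external links from internal ones. For product-related classes, [class^="product-"] works only when that class comes first, because the match runs against the whole class attribute string.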

Ends With ($=)

The dollar equals like [href$=".pdf"] selects elements where the attribute value ends with the specified string.

Use case: All PDF download links: a[href$=".pdf"]. All PNG images: img[src$=".png"]

Contains (*=)

The asterisk equals like [href*="product"] selects elements where the attribute value contains the specified substring anywhere.

Use case: Links containing "product" in the URL: a[href*="product"]

Caution: Contains is the loosest match. [class*="price"] matches "price", "price-discount", "old-price", and "product-price-wrapper". Use more specific patterns when possible.

Word Match (~=)

The tilde equals like [class~="featured"] selects elements where the attribute contains the specified word in a space-separated list.

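Use case: An element with class="product featured sale" is matched by [class~="featured"], because "featured" is a complete word in the list. [class~="feature"] does not match it. [class*="feature"] does match, since *= also matches partial strings.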

Attribute Selector Quick Reference

| Pattern | Meaning | Example |
|---|---|---|
| [attr] | Has attribute | [data-id] |
| [attr=value] | Exact match | [type="email"] |
| [attr^=value] | Starts with | [href^="https"] |
| [attr$=value] | Ends with | [src$=".jpg"] |
| [attr*=value] | Contains | [class*="btn"] |
| [attr~=value] | Word in list | [class~="active"] |
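The operators in the table boil down to simple string comparisons. The plain-Python sketch below is not a real selector engine. It only mimics how each operator compares a single attribute value, and it simplifies case handling and whitespace rules.

```python
from typing import Optional


def attr_matches(op: str, actual: Optional[str], value: str = "") -> bool:
    """Sketch of how CSS attribute operators compare one attribute value.

    `actual` is the element's attribute value, or None if the attribute is
    absent. Simplified: case-sensitive, and str.split() stands in for the
    CSS definition of whitespace.
    """
    if actual is None:       # attribute absent: no operator can match
        return False
    if op == "":             # [attr]: presence is enough
        return True
    if op == "=":            # [attr=value]: exact match
        return actual == value
    if op == "~=":           # [attr~=value]: whole word in a space-separated list
        if value == "" or any(ch.isspace() for ch in value):
            return False     # such values can never equal a single word
        return value in actual.split()
    if value == "":          # ^=, $= and *= never match an empty value
        return False
    if op == "^=":           # [attr^=value]: prefix
        return actual.startswith(value)
    if op == "$=":           # [attr$=value]: suffix
        return actual.endswith(value)
    if op == "*=":           # [attr*=value]: substring anywhere
        return value in actual
    raise ValueError(f"unsupported operator: {op!r}")


classes = "product featured sale"
print(attr_matches("~=", classes, "featured"))  # True: whole word
print(attr_matches("~=", classes, "feature"))   # False: only part of a word
print(attr_matches("*=", classes, "feature"))   # True: substring
print(attr_matches("^=", "card product-item", "product-"))  # False: not at the start
```

The last line shows why a prefix match on the class attribute misses elements where another class is listed first.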

Pseudo-Class Selectors

Pseudo-classes select elements based on their state or position. Critical for extracting specific items from lists.

Position-Based

Selectors like li:first-child, li:last-child, li:nth-child(3), li:nth-child(odd), and li:nth-child(even) target elements by position.

Use cases:

- First item in a list: :first-child

- Last item: :last-child

- Third item specifically: :nth-child(3)

- Every other row: :nth-child(odd) or :nth-child(even)

- Every third item: :nth-child(3n)

- First item of every group of 3: :nth-child(3n+1)

Example: Extracting the top 3 search results: .search-result:nth-child(-n+3)
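The an+b syntax selects the 1-based positions p for which some integer n ≥ 0 satisfies a·n + b = p. The plain-Python sketch below reproduces that rule so you can preview which positions a formula picks. One caveat: :nth-child counts every element sibling, whatever its class. So .search-result:nth-child(-n+3) only returns results that sit among the first three children of their parent. If an ad or heading shares that parent, fewer results match.

```python
def nth_child_matches(position: int, a: int, b: int) -> bool:
    """True if a 1-based sibling position matches :nth-child(an+b).

    A position matches when some integer n >= 0 satisfies a*n + b == position.
    """
    if a == 0:                      # plain number, e.g. :nth-child(3)
        return position == b
    diff = position - b
    # diff must be a whole multiple of a, reached with a non-negative n
    return diff % a == 0 and diff // a >= 0


def matching_positions(count: int, a: int, b: int) -> list[int]:
    """Positions 1..count that :nth-child(an+b) would select."""
    return [p for p in range(1, count + 1) if nth_child_matches(p, a, b)]


print(matching_positions(6, 2, 1))    # odd  -> [1, 3, 5]
print(matching_positions(6, 2, 0))    # even -> [2, 4, 6]
print(matching_positions(9, 3, 0))    # 3n   -> [3, 6, 9]
print(matching_positions(10, 3, 1))   # 3n+1 -> [1, 4, 7, 10]
print(matching_positions(10, -1, 3))  # -n+3 -> [1, 2, 3]
```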

Type-Based Position

Selectors like p:first-of-type, p:last-of-type, and p:nth-of-type(2) work like position-based selectors but only count elements of the same type.

Use case: In a container with mixed elements, p:first-of-type selects the first paragraph, even if other elements come before it.
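Here is a quick way to see the difference between :first-child and :first-of-type. This sketch assumes beautifulsoup4 is installed, and the review markup is invented:

```python
from bs4 import BeautifulSoup

html = """
<div class="review">
  <h3>Great lamp</h3>
  <p>Bright and compact.</p>
  <p>Arrived in two days.</p>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# :first-child finds nothing because the h3, not a p, is the first child
first_child = soup.select("div.review > p:first-child")
# :first-of-type and :nth-of-type count only <p> siblings
first_p = soup.select_one("div.review > p:first-of-type").get_text()
second_p = soup.select_one("div.review > p:nth-of-type(2)").get_text()

print(first_child)  # []
print(first_p)      # Bright and compact.
print(second_p)     # Arrived in two days.
```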

Negation

The :not() selector like .product:not(.sold-out) selects elements that don't match the inner selector.

Use case: Active products only: .product:not(.sold-out). Skip table headers: tr:not(:first-child)

Empty Elements

Selectors like td:empty select elements that have no children at all, including text.

Use case: Detecting missing data in tables where empty cells indicate unavailable information.
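The next sketch shows :not() and :empty working together on a small invented page. It assumes beautifulsoup4 is installed. It uses Python's built-in "html.parser", which does not insert a tbody element, so the header row really is the table's first child here.

```python
from bs4 import BeautifulSoup

html = """
<div class="product">Lamp</div>
<div class="product sold-out">Chair</div>
<div class="product">Desk</div>
<table>
  <tr><th>Item</th><th>Stock</th></tr>
  <tr><td>Lamp</td><td>12</td></tr>
  <tr><td>Chair</td><td></td></tr>
</table>
"""
soup = BeautifulSoup(html, "html.parser")

in_stock = [d.get_text() for d in soup.select(".product:not(.sold-out)")]
data_rows = soup.select("tr:not(:first-child)")  # skips the header row
# Report which item sits next to each empty stock cell
missing = [td.find_previous_sibling("td").get_text() for td in soup.select("td:empty")]

print(in_stock)        # ['Lamp', 'Desk']
print(len(data_rows))  # 2
print(missing)         # ['Chair']
```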

Combining Selectors

You can combine multiple selectors for precise targeting.

Multiple Conditions (AND)

Chaining selectors without spaces like input[type="text"].email-field means the element must match all conditions.

Multiple Matches (OR)

Comma-separated selectors like .price, .cost, .amount match elements meeting any condition.

Use case: Extract prices labeled differently across pages: .price, .cost, .amount
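The sketch below tries both forms on a made-up form and price list, assuming beautifulsoup4 is installed. For a comma-separated list, results come back in document order rather than grouped by selector. Browsers' querySelectorAll behaves the same way.

```python
from bs4 import BeautifulSoup

html = """
<form>
  <input type="text" class="email-field" name="email">
  <input type="text" name="name">
  <input type="hidden" class="email-field" name="token">
</form>
<span class="cost">$12</span>
<span class="price">$10</span>
<span class="amount">$8</span>
"""
soup = BeautifulSoup(html, "html.parser")

# AND: one element must satisfy the type test and the class test
and_match = [i["name"] for i in soup.select('input[type="text"].email-field')]
# OR: any listed selector may match; output follows document order
or_match = [s.get_text() for s in soup.select(".price, .cost, .amount")]

print(and_match)  # ['email']
print(or_match)   # ['$12', '$10', '$8']
```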

Common Scraping Patterns

Product Listings

Common selectors include:

- Product cards: .product-card, .product-item, [data-testid="product"]

- Product titles: .product-card .title, .product-title, h2.product-name

- Prices: .price, .current-price, [data-price], span.amount

- Original/sale prices: .original-price, .was-price vs .sale-price, .discount-price

- Product links: .product-card a[href*="/product/"]

- Images: .product-card img, img.product-image
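A common pattern is to select each card first and then run short selectors inside that card. That way each name stays paired with its own price and link. The sketch below assumes beautifulsoup4 is installed. The HTML and class names are hypothetical, drawn from the list above.

```python
from bs4 import BeautifulSoup

html = """
<div class="product-card">
  <a href="/product/lamp-42"><img class="product-image" src="/img/lamp.jpg" alt="Lamp"></a>
  <h2 class="product-name">Desk Lamp</h2>
  <span class="original-price">$39.99</span>
  <span class="sale-price">$29.99</span>
</div>
<div class="product-card">
  <a href="/product/chair-7"><img class="product-image" src="/img/chair.jpg" alt="Chair"></a>
  <h2 class="product-name">Office Chair</h2>
  <span class="price">$89.00</span>
</div>
"""
soup = BeautifulSoup(html, "html.parser")


def text_of(scope, selector):
    """Stripped text of the first match inside `scope`, or None."""
    el = scope.select_one(selector)
    return el.get_text(strip=True) if el is not None else None


products = []
for card in soup.select(".product-card"):
    # Every selector below runs inside one card, so fields stay aligned
    link = card.select_one('a[href*="/product/"]')
    img = card.select_one("img.product-image")
    products.append({
        "name": text_of(card, "h2.product-name"),
        # Prefer the sale price; fall back to the regular price
        "price": text_of(card, ".sale-price") or text_of(card, ".price"),
        "was": text_of(card, ".original-price"),
        "url": link["href"] if link is not None else None,
        "image": img["src"] if img is not None else None,
    })

print(products[0]["price"], products[1]["price"])  # $29.99 $89.00
```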

Table Data

Common selectors include:

- All data rows (skip header): table tbody tr or tr:not(:first-child)

- Specific columns: td:nth-child(1), td:nth-child(2), td:last-child

- Header cells: th, thead td
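The sketch below turns a table into one dictionary per row, keyed by the header text. It assumes beautifulsoup4 is installed and uses an invented pricing table. One thing to watch for: browsers insert a tbody element into the DOM even when the page source omits it. A selector copied from DevTools that contains tbody can therefore match nothing in raw HTML parsed with Python's "html.parser", which does not add one.

```python
from bs4 import BeautifulSoup

html = """
<table id="rates">
  <thead><tr><th>Plan</th><th>Price</th><th>Seats</th></tr></thead>
  <tbody>
    <tr><td>Starter</td><td>$9</td><td>1</td></tr>
    <tr><td>Team</td><td>$29</td><td>5</td></tr>
  </tbody>
</table>
"""
soup = BeautifulSoup(html, "html.parser")

headers = [th.get_text(strip=True) for th in soup.select("#rates thead th")]
# Pair each data row's cells with the header names
rows = [
    dict(zip(headers, (td.get_text(strip=True) for td in tr.select("td"))))
    for tr in soup.select("#rates tbody tr")
]
# A single column: the first cell of every body row
plans = [td.get_text() for td in soup.select("#rates tbody td:nth-child(1)")]

print(rows[0])  # {'Plan': 'Starter', 'Price': '$9', 'Seats': '1'}
print(plans)    # ['Starter', 'Team']
```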

Navigation and Links

Common selectors include:

- Main navigation: nav a, .nav-menu a, header a

- Pagination: .pagination a, .pager a, a[rel="next"]

- Current page: .pagination .active, .current-page

- Breadcrumbs: .breadcrumb a, nav[aria-label="breadcrumb"] a
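For pagination, the rel="next" link is usually the most stable hook. Its href is often relative, so it needs resolving against the current page URL. This is a minimal sketch, assuming beautifulsoup4 is installed. The ".pagination a.next" fallback is a hypothetical class name. The sketch uses [rel~="next"] so it also matches values like rel="next nofollow".

```python
from typing import Optional
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def next_page_url(html: str, base_url: str) -> Optional[str]:
    """Return the absolute URL of the next results page, or None on the last page."""
    soup = BeautifulSoup(html, "html.parser")
    link = soup.select_one('a[rel~="next"]')
    if link is None:
        # Hypothetical fallback for sites that only mark the link with a class
        link = soup.select_one(".pagination a.next")
    if link is None or not link.has_attr("href"):
        return None
    # Resolve relative hrefs such as "?page=3" against the current page URL
    return urljoin(base_url, link["href"])


page = '<nav class="pagination"><a href="?page=1">1</a><a rel="next" href="?page=3">Next</a></nav>'
print(next_page_url(page, "https://example.com/shop?page=2"))
# https://example.com/shop?page=3
```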

Article/Content Pages

Common selectors include:

- Titles: article h1, .post-title, .article-title

- Author: .author, .byline, [rel="author"]

- Date: time, .date, .published-date, [datetime]

- Content body: article p, .post-content p, .article-body

- Tags/categories: .tags a, .categories a, [rel="tag"]
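On article pages, some fields are more reliable in attributes than in visible text. For example, time[datetime] carries a machine-readable date. The sketch below assumes beautifulsoup4 is installed, and the article markup, author, and tags are invented.

```python
from bs4 import BeautifulSoup

html = """
<article>
  <h1 class="post-title">Scraping Politely</h1>
  <a rel="author" href="/authors/jane">Jane Doe</a>
  <time datetime="2024-03-18">March 18, 2024</time>
  <div class="post-content"><p>First paragraph.</p><p>Second paragraph.</p></div>
  <div class="tags"><a rel="tag" href="/t/python">python</a><a rel="tag" href="/t/css">css</a></div>
</article>
"""
soup = BeautifulSoup(html, "html.parser")

article = {
    "title": soup.select_one("article h1").get_text(strip=True),
    "author": soup.select_one('[rel="author"]').get_text(strip=True),
    # Read the machine-readable date from the attribute, not the display text
    "published": soup.select_one("time[datetime]")["datetime"],
    "body": "\n".join(p.get_text(strip=True) for p in soup.select(".post-content p")),
    "tags": [a.get_text(strip=True) for a in soup.select('.tags a[rel="tag"]')],
}
print(article["published"], article["tags"])  # 2024-03-18 ['python', 'css']
```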

Building Robust Selectors

Fragile selectors break when websites update. Here's how to build selectors that last.

Prefer Semantic Attributes

Fragile: Auto-generated class names like .css-1a2b3c or deep nesting like div > div > div > span.

Robust: Semantic selectors like .product-title or [data-testid="price"].

Auto-generated class names can change with every build. Semantic names tend to persist across updates.

Use Data Attributes When Available

Selectors targeting [data-testid="add-to-cart-button"], [data-qa="product-name"], or [data-product-id] tend to be highly stable. Developers add these attributes for automated tests and keep them consistent so the tests keep passing. A gift for scrapers.

Avoid Deep Nesting

Fragile: div.container > div.row > div.col-md-8 > div.card > div.card-body > h5.card-title

Robust: .card .card-title

Deep chains break when any intermediate element changes. Shorter selectors are more resilient.

Combine Multiple Anchors

Using patterns like .product-card[data-in-stock="true"] .price makes selectors more specific and less likely to match unintended elements.
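Another way to apply these principles is to keep an ordered list of candidate selectors, from most to least stable, and use the first one that matches. A single site change then degrades extraction instead of breaking it. This is a minimal sketch, assuming beautifulsoup4 is installed. The selector list is hypothetical.

```python
from typing import Optional

from bs4 import BeautifulSoup

# Hypothetical selectors, ordered from most to least stable
PRICE_SELECTORS = ['[data-testid="price"]', ".product-card .price", "span.amount"]


def first_match_text(soup, selectors) -> Optional[str]:
    """Return the text of the first element found by the highest-priority selector."""
    for selector in selectors:
        el = soup.select_one(selector)
        if el is not None:
            return el.get_text(strip=True)
    return None


old_layout = BeautifulSoup('<div class="product-card"><span class="price">$10</span></div>', "html.parser")
new_layout = BeautifulSoup('<div><span data-testid="price">$12</span><span class="amount">$99</span></div>', "html.parser")
print(first_match_text(old_layout, PRICE_SELECTORS))  # $10
print(first_match_text(new_layout, PRICE_SELECTORS))  # $12
```

Logging which selector matched is a cheap way to notice when a site has drifted onto a fallback.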

Testing Selectors

Before building extraction rules, test selectors in your browser.

Browser Console

Open DevTools (F12), go to Console, and run document.querySelectorAll('.your-selector'). This returns a NodeList of matching elements. Check the count and inspect results.

Elements Panel

In DevTools Elements tab, press Ctrl+F (Cmd+F on Mac) and enter your CSS selector. The browser highlights matches and shows the count.

Verify Before Scraping

- Open the target page

- Test your selector in DevTools

- Confirm it matches the correct number of elements

- Check that matched elements contain the expected data

- Try on multiple pages to ensure consistency
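For static HTML you have already fetched or saved, you can script part of this checklist. The sketch below assumes beautifulsoup4 is installed, and the page names and markup are hypothetical. It flags pages where the match count is off or where matched elements have no text. It cannot catch content that only appears after JavaScript runs.

```python
from bs4 import BeautifulSoup


def check_selector(pages: dict, selector: str, expected_count: int) -> dict:
    """Report pages where `selector` finds the wrong number of elements
    or matches elements with no text."""
    report = {}
    for name, html in pages.items():
        matches = BeautifulSoup(html, "html.parser").select(selector)
        empty = sum(1 for el in matches if not el.get_text(strip=True))
        if len(matches) != expected_count or empty:
            report[name] = {"count": len(matches), "empty": empty}
    return report


# Hypothetical saved pages; in practice these would be HTML you fetched
pages = {
    "page-1": '<span class="price">$10</span><span class="price">$12</span>',
    "page-2": '<span class="price">$8</span><span class="price"></span>',
    "page-3": '<span class="cost">$5</span>',
}
print(check_selector(pages, ".price", expected_count=2))
# {'page-2': {'count': 2, 'empty': 1}, 'page-3': {'count': 0, 'empty': 0}}
```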

CSS vs XPath: When to Use Each

CSS selectors handle most scraping needs, but XPath offers capabilities CSS lacks.

Use CSS when:

- Targeting by class, ID, or tag

- Matching attribute patterns

- Selecting based on element position

- Performance matters (CSS is typically faster)

Use XPath when:

- Selecting by text content: //div[contains(text(), 'In Stock')]

- Navigating to parent elements: //span[@class='price']/parent::div

- Complex conditional logic: //div[@class='product' and @data-available='true']

- Selecting by sibling count or complex relationships
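Some of these gaps are narrower than they used to be. The :has() pseudo-class can select a parent by its contents and is supported in current browsers. BeautifulSoup's selector engine, soupsieve, supports :has() as well. Soupsieve also adds a non-standard :-soup-contains() for matching text, which browsers do not accept. The combined-condition example above also has a direct CSS form, div.product[data-available="true"]. The sketch below assumes beautifulsoup4 with soupsieve 2.1 or later, and the product markup is invented.

```python
from bs4 import BeautifulSoup

html = """
<div class="product" data-available="true">
  <span class="price">$29.99</span>
  <span class="stock">In Stock</span>
</div>
<div class="product" data-available="false">
  <span class="price">$15.00</span>
  <span class="stock">Sold Out</span>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# Parent navigation: step up from a match in Python code...
parents = [span.find_parent("div") for span in soup.select("span.price")]
# ...or select the parent directly with :has()
has_price = soup.select("div.product:has(> span.price)")
# Text matching with soupsieve's non-standard :-soup-contains()
in_stock = soup.select('div.product:has(span.stock:-soup-contains("In Stock"))')
# Combined attribute conditions need no XPath
available = soup.select('div.product[data-available="true"]')

print(len(parents), len(has_price))             # 2 2
print([d["data-available"] for d in in_stock])  # ['true']
```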

AI-Powered Alternatives

Traditional scraping requires you to find and maintain exact selectors. When layouts change, selectors break.

AI-powered tools like Lection take a different approach. Instead of targeting specific HTML elements, AI recognizes data visually: "that looks like a price," "those are product names," "this repeating pattern is a listing."

Advantages:

- Automatic adaptation to layout changes

- No selector maintenance

- Works on sites with obfuscated class names

- Extracts data from complex, JavaScript-heavy pages

When to use:

- Rapid prototyping when you need data fast

- Sites that change frequently

- No-code extraction without selector expertise

- Dynamic sites where traditional selectors fail

Troubleshooting Common Issues

Selector Matches Nothing

Problem: Your selector returns 0 results.

Solutions:

- Check spelling and case sensitivity

- Verify the page has fully loaded (JavaScript may add elements later)

- Check if content is inside an iframe (need different approach)

- Confirm the element exists on this page variant

Selector Matches Too Many Elements

Problem: You wanted 10 products but selected 50 elements.

Solutions:

- Add more specific qualifiers: .product-grid .product-card instead of .product-card

- Use :not() to exclude unwanted matches

- Combine with attribute selectors for precision

Data Is Empty or Wrong

Problem: Selector matches but extracted text is empty or incorrect.

Solutions:

- Content may be in a child element: try .container .text instead of .container

- Text might be in an attribute: check data-value, title, alt

- Content may be loaded via JavaScript after the initial render; wait for it to appear or use a tool that renders the page
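When a selector matches but the text comes back empty, you can fall back to the attributes listed above. This is a minimal sketch, assuming beautifulsoup4 is installed. The function name and sample markup are hypothetical.

```python
from typing import Optional

from bs4 import BeautifulSoup


def extract_value(el) -> Optional[str]:
    """Return visible text, falling back to attributes that often hold the data."""
    text = el.get_text(strip=True)
    if text:
        return text
    for attr in ("data-value", "title", "alt"):
        value = el.get(attr)
        if value:
            return value
    return None


soup = BeautifulSoup(
    '<span class="rating" data-value="4.5"></span>'
    '<img class="badge" alt="Best seller" src="b.png">'
    '<span class="price">$9</span><span class="blank"></span>',
    "html.parser",
)
print(extract_value(soup.select_one(".rating")))  # 4.5
print(extract_value(soup.select_one(".badge")))   # Best seller
```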

Selector Worked Yesterday, Fails Today

Problem: Previously working extraction broke.

Solutions:

- Re-inspect the page, since the website may have updated its layout

- Check if class names changed (especially auto-generated ones)

- Look for stable attributes like data-testid

- Consider AI-powered tools for automatic adaptation

Conclusion

CSS selectors are the foundation of web scraping. Whether you're writing Python scripts, configuring browser automation, or using no-code tools, selector fluency determines extraction success.

The key principles:

- Start simple, add specificity as needed

- Prefer stable attributes like semantic classes and data-* attributes

- Test in browser before building extraction rules

- Keep selectors short for resilience

For sites that change frequently or when you'd rather skip selector maintenance entirely, AI-powered tools like Lection handle element targeting automatically.

Ready to put these selectors to work? Install Lection and start extracting structured data from any website.