You send a request. The server sends back a number. That three-digit code determines whether your scraper gets data or gets blocked.

HTTP status codes are the language servers use to communicate with clients. For web scrapers, understanding these codes is the difference between successful data extraction and frustrating debugging sessions. A 200 means success. A 403 usually means you've been detected. A 429 means slow down. A 503 might mean the server is overloaded, or it might mean you've been deliberately blocked.

This guide covers every HTTP status code relevant to web scraping, explains what causes each one, and provides practical solutions. Bookmark this page. You'll reference it often.

How HTTP Status Codes Work

When your scraper requests a webpage, the server responds with a three-digit status code in the first line of the response, ahead of the headers. These codes fall into five classes:

| Code Range | Category | Meaning for Scrapers |
|---|---|---|
| 1xx | Informational | Request received, processing continues |
| 2xx | Success | Request succeeded, data returned |
| 3xx | Redirection | Follow another URL to get the data |
| 4xx | Client Error | Your request is the problem |
| 5xx | Server Error | The server has a problem |

For scrapers, 2xx codes are the goal. 3xx codes are navigation instructions. 4xx and 5xx codes are obstacles that require specific handling strategies.
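
The five classes above map directly onto the first digit of the code. A minimal sketch of that mapping, where the function name `status_class` and the returned labels are illustrative choices rather than anything standardized:

```python
def status_class(code: int) -> str:
    """Return the class of an HTTP status code based on its first digit."""
    classes = {
        1: "informational",
        2: "success",
        3: "redirection",
        4: "client_error",
        5: "server_error",
    }
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    # Integer division by 100 isolates the first digit (e.g. 404 -> 4).
    return classes[code // 100]
```

A scraper can branch on the class first and only then look at the specific code, which keeps unfamiliar codes (for example, a 5xx you have never seen) from falling through unhandled.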

Success Codes (2xx)

These are the codes you want to see. They indicate your request succeeded.

200 OK

**What it means:** The request succeeded and the response body contains the requested data.

**For scrapers:** This is the ideal outcome. Your request was accepted, and you received the HTML, JSON, or other content you requested.

**Gotcha:** A 200 doesn't guarantee you got useful data. Some sites return a 200 with a CAPTCHA page, a login wall, or an empty container where data should be. Always validate the actual content, not just the status code.
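
One way to act on this gotcha is to check the body for signs of a block page and for something you expect on a real page. The sketch below is a heuristic only: the marker strings in `BLOCK_MARKERS` and the `required_marker` parameter are hypothetical and need tuning per site.

```python
# Hypothetical phrases that tend to appear on block pages; adjust per target site.
BLOCK_MARKERS = ("captcha", "verify you are human", "access denied")


def looks_like_real_content(status: int, body: str, required_marker: str) -> bool:
    """Return True only if the response is a 200 AND the body looks like real data."""
    if status != 200:
        return False
    lowered = body.lower()
    # A block page served with a 200 usually contains one of these phrases.
    if any(marker in lowered for marker in BLOCK_MARKERS):
        return False
    # The page must also contain something we expect, e.g. a product-title class.
    return required_marker.lower() in lowered
```

For example, `looks_like_real_content(200, html, 'class="product-title"')` would reject both a CAPTCHA page and an empty shell. Pages that legitimately mention a marker word will be rejected too, so treat a failure as "inspect this response", not as proof of blocking.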

201 Created

**What it means:** The request succeeded and a new resource was created.

**For scrapers:** Less relevant for data extraction, but you might see this when automating form submissions or account creation.

204 No Content

**What it means:** The request succeeded but there's no content to return.

**For scrapers:** You might encounter this on endpoints that perform actions rather than return data. If you expected content and got 204, check whether you're hitting the right endpoint.

Redirection Codes (3xx)

These codes tell your client to look elsewhere for the content.

301 Moved Permanently

**What it means:** The requested resource has permanently moved to a new URL.

**For scrapers:** Update your URLs. The Location header contains the new address. Most HTTP libraries follow redirects automatically, but if you're building scraped URL databases, store the final URL after redirects.

Example scenario: You're scraping product pages and a website migrates from example.com/products/123 to example.com/p/abc123. Your old URLs will 301 to the new structure.

302 Found (Temporary Redirect)

What it means: The resource temporarily lives at a different URL.

For scrapers: Follow the redirect but keep your original URL. The content may return to the original location later.

Common cause: Geographic redirects. A US IP might be redirected to example.com/us/ while a UK IP goes to example.com/uk/.

303 See Other

What it means: The response to your request is available at another URL, and you should GET that URL.

For scrapers: Most commonly seen after form submissions. The server processes your POST request and redirects you to a results page.

307 Temporary Redirect

What it means: Like 302, but explicitly requires using the same HTTP method for the redirect.

For scrapers: If you POST to a URL and get 307, you must POST (not GET) to the new location. This matters for form automation.

308 Permanent Redirect

What it means: Like 301, but requires using the same HTTP method for the redirect.

For scrapers: Similar to 307, but permanent. Update your stored URLs and preserve the HTTP method.
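
If you follow redirects by hand, the method rules above can be sketched as a single function. This is an illustration, not a full client: `next_request` is a hypothetical helper, and the POST-to-GET rewrite for 301 and 302 reflects common browser and library behavior rather than a strict requirement.

```python
from urllib.parse import urljoin


def next_request(method: str, url: str, status: int, location: str) -> tuple[str, str]:
    """Return the (method, url) pair to use for the request that follows a redirect."""
    target = urljoin(url, location)  # the Location header may be a relative URL
    method = method.upper()
    if status == 303 and method != "HEAD":
        # 303 See Other: fetch the target with GET.
        return "GET", target
    if status in (301, 302) and method == "POST":
        # Most clients rewrite POST to GET on 301/302 (assumed here).
        return "GET", target
    if status in (301, 302, 307, 308):
        # 307/308 must keep the method; other methods on 301/302 are kept as well.
        return method, target
    raise ValueError(f"{status} is not a redirect handled by this sketch")
```

For permanent redirects (301, 308), store the returned URL in place of the old one. For temporary ones (302, 307), keep the original URL in your database and use the target only for the current fetch.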

Client Error Codes (4xx)

These codes indicate problems with your request. For scrapers, 4xx codes often signal detection and blocking.

400 Bad Request

What it means: The server cannot process your request due to malformed syntax.

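For scrapers: Something in the request your scraper built is invalid. Retrying it unchanged will fail again, so fix the request first.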

Common causes:

- Malformed JSON in POST body

- Invalid URL encoding

- Missing required headers

- Corrupt cookie values

Solutions:

- Validate your request format before sending

- Check Content-Type headers match your body format

- URL-encode special characters in query parameters

- Clear and refresh session cookies
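
Two of the fixes above, URL-encoding query parameters and keeping Content-Type in step with the body, can be handled by building requests through small helpers instead of string concatenation. A sketch using only the standard library; the helper names are illustrative:

```python
import json
from urllib.parse import urlencode


def build_query_url(base_url: str, params: dict[str, str]) -> str:
    """Append URL-encoded query parameters to a base URL."""
    # urlencode escapes spaces, ampersands, and other special characters.
    return f"{base_url}?{urlencode(params)}"


def build_json_request(payload: dict) -> tuple[dict[str, str], bytes]:
    """Serialize a payload and return matching headers plus the encoded body."""
    # json.dumps raises TypeError on non-serializable values, so a bad
    # payload fails locally instead of producing a 400 from the server.
    body = json.dumps(payload).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    return headers, body
```

For example, `build_query_url("https://example.com/search", {"q": "red & blue shoes"})` yields `https://example.com/search?q=red+%26+blue+shoes`.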

401 Unauthorized

What it means: Authentication is required and either wasn't provided or failed.

For scrapers: You're trying to access protected content without valid credentials.

Solutions:

- Include valid API keys or tokens in headers

- Handle login flows to obtain session cookies

- Check if credentials have expired

- Verify the authentication scheme (Bearer, Basic, etc.)

Example: Scraping a user's private dashboard requires first authenticating. Capture the session cookies after login and include them in subsequent requests.
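
The header formats behind these solutions are simple to construct. A minimal sketch of the Bearer and Basic schemes plus a Cookie header built from captured session cookies; the function names and the example values are placeholders, and the scheme a given site expects is something you have to confirm yourself:

```python
import base64


def bearer_header(token: str) -> dict[str, str]:
    """Authorization header for token-based APIs."""
    return {"Authorization": f"Bearer {token}"}


def basic_header(username: str, password: str) -> dict[str, str]:
    """Authorization header for HTTP Basic auth (base64 of 'user:password')."""
    raw = f"{username}:{password}".encode("utf-8")
    return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}


def cookie_header(cookies: dict[str, str]) -> dict[str, str]:
    """Cookie header built from session cookies captured after login."""
    return {"Cookie": "; ".join(f"{name}={value}" for name, value in cookies.items())}
```

If a request that used to work starts returning 401, the usual first step is to rebuild these headers from fresh credentials and retry once before giving up.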

403 Forbidden

What it means: The server understood your request but refuses to authorize it.

For scrapers: This is the most common "you've been blocked" signal. The server has determined you're not allowed to access this resource.

Common causes:

- Your IP address has been flagged

- Your User-Agent header reveals you as a bot

- Missing or suspicious headers (Referer, Accept-Language)

- Request rate triggered anti-bot systems

- Geographic restrictions on content

- Browser fingerprint detection

Solutions:

Rotate IP addresses. Use residential proxies that distribute requests across thousands of IP addresses. This is the most effective mitigation for IP-based blocking.

Mimic real browsers. Set a realistic User-Agent string that matches current browser versions. Include standard headers like Accept, Accept-Language, Accept-Encoding, and Connection.

Handle cookies and sessions. Properly manage cookies to appear as a continuous user session rather than a series of isolated requests.

Add random delays. Space requests 3-10 seconds apart with random variation. Predictable timing patterns trigger detection.

Use headless browsers. For sites with JavaScript challenges, tools like Puppeteer or Playwright render pages like real browsers. AI-powered tools like Lection handle this automatically.

Check robots.txt. While not legally binding, respecting robots.txt directives can prevent blocks. Some sites actively block IPs that ignore their robots.txt.
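
Two of these mitigations, browser-like headers and randomized delays, are easy to sketch. The header values below are examples only; the User-Agent is passed in because it should be copied from a current browser rather than hard-coded, and the function names are illustrative.

```python
import random


def browser_like_headers(user_agent: str) -> dict[str, str]:
    """Return the standard headers a real browser sends with a page request."""
    return {
        "User-Agent": user_agent,
        "Accept": "text/html,application/xhtml+xml,*/*",
        "Accept-Language": "en-US,en;q=0.9",
        # Only advertise encodings your HTTP client can actually decode.
        "Accept-Encoding": "gzip, deflate",
        "Connection": "keep-alive",
    }


def jittered_delay(min_seconds: float = 3.0, max_seconds: float = 10.0) -> float:
    """Pick a random wait in the 3-10 second range to avoid a fixed rhythm."""
    return random.uniform(min_seconds, max_seconds)
```

In a request loop you would call `time.sleep(jittered_delay())` between fetches, so the gap between requests never settles into a predictable pattern.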

404 Not Found

What it means: The requested resource doesn't exist at this URL.

For scrapers: The page has been removed, the URL is wrong, or the resource never existed.

Solutions:

- Verify your URL construction logic

- Update your URL database when you encounter 404s

- Check if the site restructured its URLs (look for 301 redirects elsewhere)

- Handle 404s gracefully; don't retry indefinitely

Edge case: Some sites return 404 for resources that exist but require authentication. Check if the same URL returns different codes with and without login cookies.

405 Method Not Allowed

What it means: The HTTP method you used isn't allowed for this resource.

For scrapers: You tried to POST to a GET-only endpoint or vice versa.

Solutions:

- Check the endpoint documentation or observe real browser behavior

- The Allow response header lists the permitted methods

- API endpoints often require specific methods for different operations

406 Not Acceptable

What it means: The server cannot produce a response matching your Accept headers.

For scrapers: Your Accept header requests a format the server doesn't support.

Solutions:

- Use broader Accept headers like text/html,application/xhtml+xml,*/*

- Remove restrictive Accept headers and see what the server returns by default

407 Proxy Authentication Required

What it means: Your proxy server requires authentication.

For scrapers: If you're using proxies, you need to provide credentials.

Solutions:

- Configure proxy authentication in your HTTP client

- Check proxy provider documentation for authentication format

- Verify proxy credentials haven't expired

408 Request Timeout

What it means: The server timed out waiting for your request.

For scrapers: Your connection was too slow or you didn't send the complete request in time.

Solutions:

- Check network connectivity

- Reduce request payload size

- Increase timeout settings on your client

410 Gone

What it means: The resource existed but has been permanently removed.

For scrapers: Unlike 404, which might be temporary, 410 explicitly means the content is gone forever.

Solutions:

- Remove this URL from your scraping targets

- Don't retry; the content won't return

418 I'm a Teapot

What it means: Technically a joke from RFC 2324 (Hyper Text Coffee Pot Control Protocol).

For scrapers: Some anti-bot systems return this as a deliberate "we know you're a bot" signal. It's functionally equivalent to a 403.

422 Unprocessable Entity

What it means: The server understands your request format but cannot process the contained instructions.

For scrapers: Common with APIs when your request is syntactically correct but semantically wrong.

Solutions:

- Validate request payload structure

- Check required fields and data types

- Review API documentation for valid values

429 Too Many Requests

What it means: You've exceeded the rate limit. The server is telling you to slow down.

For scrapers: This is the most explicit "you're scraping too fast" signal, and the easiest to handle properly.

Common causes:

- Requests per second exceeds the threshold

- Too many requests from one IP address

- Daily or hourly limits exceeded

- Concurrent request count too high

Solutions:

Respect Retry-After. The response often includes a Retry-After header specifying how long to wait before retrying. Honor this value.

Implement exponential backoff. After each 429, wait progressively longer before retrying: 1 second, then 2, then 4, then 8. This prevents hammering a recovering server.

Throttle proactively. Don't wait for 429s. Implement request delays from the start. 2-5 seconds between requests is reasonable for most sites.

Rotate IP addresses. Distribute requests across multiple IPs so no single address triggers rate limits.

Use scheduled cloud scraping. Tools like Lection can spread extraction over time, running in the background at sustainable rates.

Cache aggressively. Don't re-request data you already have. Store results and only fetch new or updated content.
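
The first two solutions combine naturally: use Retry-After when the server sends it, and fall back to exponential backoff when it doesn't. Retry-After can carry either a number of seconds or an HTTP date, so the sketch handles both. The 60-second cap and the function names are assumptions, not values from any standard.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_after_seconds(value: Optional[str], now: Optional[datetime] = None) -> Optional[float]:
    """Parse a Retry-After header (delta-seconds or HTTP date) into seconds to wait."""
    if value is None:
        return None
    value = value.strip()
    if value.isdigit():
        return float(value)
    try:
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        return None  # unparseable header: let the caller fall back to backoff
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Exponential backoff: 1s, 2s, 4s, 8s, ... capped at `cap` seconds."""
    return min(cap, base * (2 ** attempt))


def wait_time(attempt: int, retry_after: Optional[str]) -> float:
    """Prefer the server's Retry-After value; otherwise use exponential backoff."""
    server_hint = retry_after_seconds(retry_after)
    return server_hint if server_hint is not None else backoff_delay(attempt)
```

Here `attempt` starts at 0 for the first retry. Adding a small random offset to the result is a common refinement when many workers share one target.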

Server Error Codes (5xx)

These codes indicate the server failed to fulfill your request due to its own problems.

500 Internal Server Error

What it means: Something went wrong on the server, but it's not telling you what.

For scrapers: The request might have triggered a bug, or the server is genuinely broken.

Solutions:

- Retry after a delay (the issue might be temporary)

- Check if your request is unusual in a way that might trigger server bugs

- Verify the website works in a normal browser

502 Bad Gateway

What it means: A gateway or proxy server received an invalid response from an upstream server.

For scrapers: Often indicates infrastructure issues, CDN problems, or server crashes.

Solutions:

- Retry after 30-60 seconds

- If persistent, the site may be down

- Check downdetector-style services for the target site

503 Service Unavailable

What it means: The server is temporarily unable to handle requests.

For scrapers: This can mean legitimate overload, or it can be a deliberate anti-scraping measure.

Common causes:

- Server maintenance

- Traffic spike overwhelming capacity

- Deliberate blocking disguised as unavailability

- Backend service failures

Solutions:

Check if it's real. Try accessing the site in a normal browser. If the site works, the 503 is targeted at your scraper.

Retry with backoff. If it's legitimate downtime, implement exponential backoff and retry.

Rotate infrastructure. If it's targeted blocking, switch IPs, User-Agents, and other fingerprintable elements.

Use cloud scraping. Services like Lection route requests through residential IPs, reducing the chance of targeted 503s.

504 Gateway Timeout

What it means: A gateway or proxy didn't receive a timely response from the upstream server.

For scrapers: The target server is slow or unresponsive.

Solutions:

- Retry after a delay

- Reduce request frequency (you might be contributing to the slowdown)

- Check if the site is under heavy load

520-530 Cloudflare Errors

Cloudflare uses custom status codes in the 520-530 range:

| Code | Meaning |
|---|---|
| 520 | Unknown error (Cloudflare couldn't understand the origin response) |
| 521 | Web server is down |
| 522 | Connection timed out |
| 523 | Origin is unreachable |
| 524 | A timeout occurred |
| 525 | SSL handshake failed |
| 526 | Invalid SSL certificate |
| 527 | Railgun error |
| 530 | DNS resolution error |

For scrapers: These indicate infrastructure issues between Cloudflare and the origin server. Retry with exponential backoff. If the site is behind Cloudflare's anti-bot protection, you may also encounter JavaScript challenges before these codes.

Building Robust Error Handling

Your scraper should handle status codes programmatically. Here's a strategy:

Retry Strategy by Code

| Code | Retry | Strategy |
|---|---|---|
| 200 | No | Success, process data |
| 301/302 | Auto | Follow redirect |
| 400 | No | Fix request format |
| 401 | Maybe | Refresh credentials |
| 403 | Maybe | Rotate IP, update headers |
| 404 | No | Remove from queue |
| 429 | Yes | Wait per Retry-After |
| 500 | Yes | Exponential backoff |
| 502 | Yes | Wait 30-60 seconds |
| 503 | Yes | Exponential backoff |
| 504 | Yes | Exponential backoff |
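
The table translates almost directly into a lookup. In the sketch below the `Action` names are illustrative, and the fallback for codes the table doesn't list (other 5xx back off, other 4xx are dropped) is an assumption added for completeness.

```python
from enum import Enum


class Action(Enum):
    PROCESS = "process data"
    FOLLOW_REDIRECT = "follow redirect"
    FIX_REQUEST = "fix request format"
    REFRESH_CREDENTIALS = "refresh credentials"
    ROTATE_AND_RETRY = "rotate IP, update headers"
    DROP = "remove from queue"
    WAIT_RETRY_AFTER = "wait per Retry-After"
    BACKOFF = "exponential backoff"
    FIXED_WAIT = "wait 30-60 seconds"


# One entry per row of the retry table.
STRATEGY = {
    200: Action.PROCESS,
    301: Action.FOLLOW_REDIRECT,
    302: Action.FOLLOW_REDIRECT,
    400: Action.FIX_REQUEST,
    401: Action.REFRESH_CREDENTIALS,
    403: Action.ROTATE_AND_RETRY,
    404: Action.DROP,
    429: Action.WAIT_RETRY_AFTER,
    500: Action.BACKOFF,
    502: Action.FIXED_WAIT,
    503: Action.BACKOFF,
    504: Action.BACKOFF,
}


def action_for(status: int) -> Action:
    """Look up the handling strategy for a status code."""
    if status in STRATEGY:
        return STRATEGY[status]
    # Assumed fallbacks for codes the table does not cover.
    if 500 <= status <= 599:
        return Action.BACKOFF
    if 400 <= status <= 499:
        return Action.DROP
    raise ValueError(f"no strategy defined for status {status}")
```

Keeping the policy in one dictionary means a change in strategy (say, treating 403 as a hard stop) is a one-line edit rather than a hunt through request code.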

Logging Best Practices

Log every non-200 response with:

- Timestamp

- URL requested

- Status code received

- Response headers (especially Retry-After, Location)

- Request headers you sent

- Response body (first 1000 characters)

This data helps you debug patterns. If 60% of your 403s come from one IP, you know that IP is burned. If 429s spike at certain times, you can adjust your schedule.
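
A structured record makes that kind of pattern analysis much easier than free-form log lines. A minimal sketch that captures the fields listed above as one JSON line; the field names and the `build_log_record` helper are illustrative:

```python
import json
from datetime import datetime, timezone


def build_log_record(
    url: str,
    status: int,
    request_headers: dict[str, str],
    response_headers: dict[str, str],
    body: str,
) -> str:
    """Serialize one non-200 response as a single JSON log line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "url": url,
        "status": status,
        "request_headers": dict(request_headers),
        "response_headers": dict(response_headers),
        # Keep only the first 1000 characters of the body.
        "body_preview": body[:1000],
    }
    return json.dumps(record)
```

Each line can be appended to a file and later loaded for grouping by status, IP, or hour. If your request headers carry credentials, consider masking Authorization and Cookie values before writing them out.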

Common Scraping Scenarios

Scenario: E-commerce Product Extraction

You're scraping 10,000 product pages from an e-commerce site.

Expected codes:

- 200: Product exists, data returned

- 301: Product URL changed

- 404: Product removed from catalog

- 429: Scraping too fast

- 403: Detected as bot

Strategy:

- Start slow (2-second delays)

- Handle 301s by updating your URL list

- Track 404s to identify removed products

- On 429, pause all requests for Retry-After duration

- On 403, rotate IP and reduce request rate

Scenario: API Data Collection

You're pulling data from a third-party API with rate limits.

Expected codes:

- 200: Success

- 401: Token expired

- 429: Rate limit hit

- 500: API server issue

Strategy:

- Track rate limit headers (X-RateLimit-Remaining)

- Refresh tokens proactively before expiry

- Implement request queuing to stay under limits

- Cache responses to reduce duplicate requests
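
Tracking the rate limit headers lets you pause before the API returns a 429. The sketch below assumes the API also sends an X-RateLimit-Reset header holding a Unix timestamp; that header and its format vary between providers, so check the API's documentation before relying on it.

```python
def seconds_to_wait(headers: dict[str, str], now: float) -> float:
    """Return how long to pause before the next request, based on rate limit headers."""
    remaining = headers.get("X-RateLimit-Remaining")
    reset = headers.get("X-RateLimit-Reset")  # assumed: Unix timestamp of the reset
    if remaining is None or reset is None:
        return 0.0  # no rate limit information: don't wait
    try:
        if int(remaining) > 0:
            return 0.0  # budget left in the current window
        return max(0.0, float(reset) - now)
    except ValueError:
        return 0.0  # malformed header values: fall back to normal pacing
```

Call it with `now=time.time()` after each response. Note that a plain dict lookup is case-sensitive, while HTTP header names are not, so normalize the keys if your client doesn't already.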

Scenario: News Article Aggregation

You're monitoring 500 news sources for new articles.

Expected codes:

- 200: Content retrieved

- 304: Not Modified (content unchanged)

- 403: Site blocks scrapers

- 503: Site under load

Strategy:

- Use If-Modified-Since headers to get 304s for unchanged content

- Rotate user agents to avoid 403s

- Prioritize sites with less blocking

- Accept that some sources will be unavailable
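
The If-Modified-Since header expects an HTTP-format date, which the standard library can produce. A minimal sketch, assuming you store a timezone-aware timestamp of the last successful fetch per source; the helper names are illustrative:

```python
from datetime import datetime, timezone
from email.utils import format_datetime


def conditional_headers(last_fetched: datetime) -> dict[str, str]:
    """Build an If-Modified-Since header from a timezone-aware datetime."""
    as_utc = last_fetched.astimezone(timezone.utc)
    # usegmt=True renders the zone as "GMT", the form HTTP dates use.
    return {"If-Modified-Since": format_datetime(as_utc, usegmt=True)}


def is_unchanged(status: int) -> bool:
    """A 304 means the cached copy is still current and parsing can be skipped."""
    return status == 304
```

Not every server honors conditional requests. A source that always answers 200 still works; it just saves no bandwidth.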

Tools That Handle Status Codes Automatically

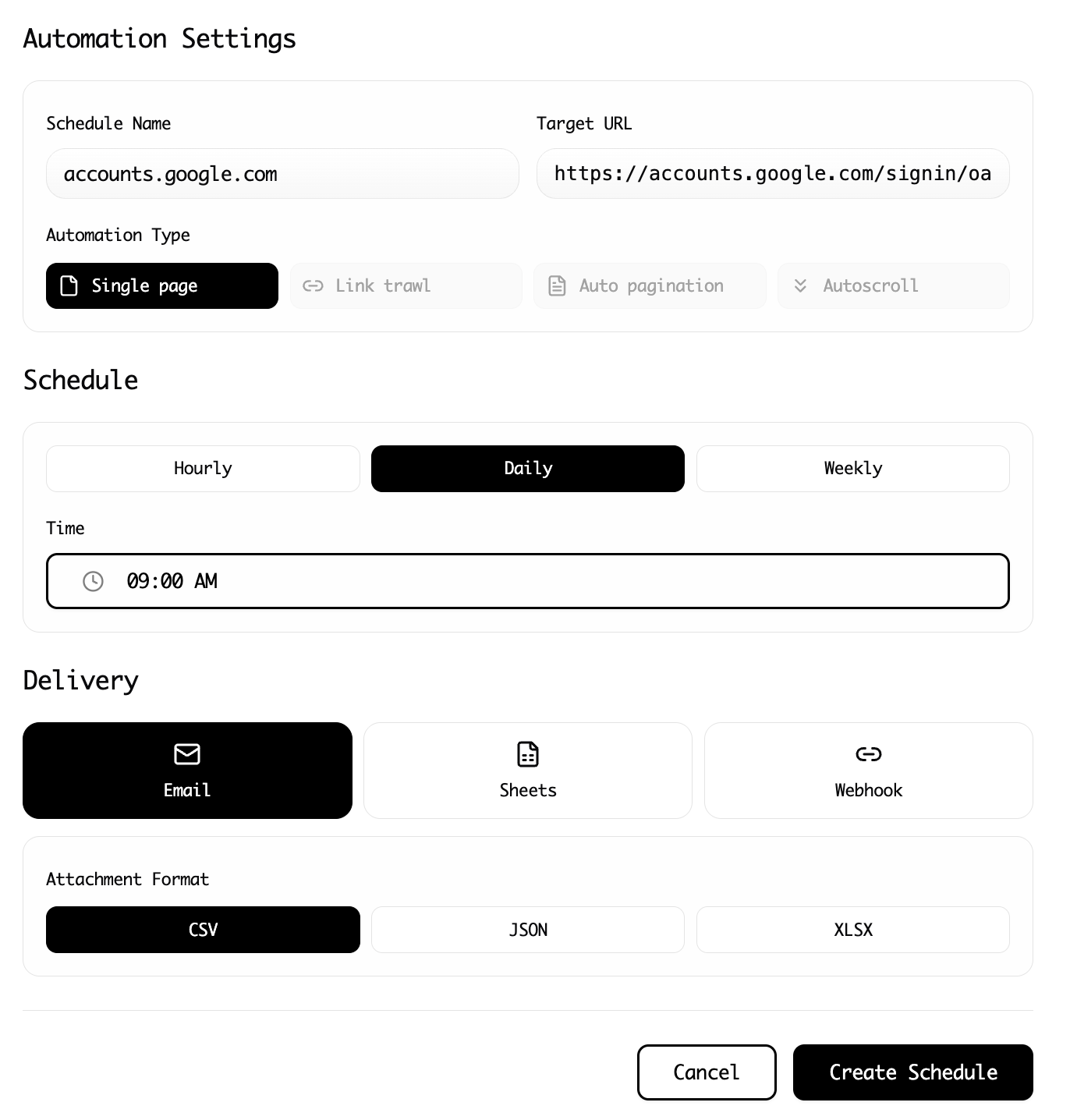

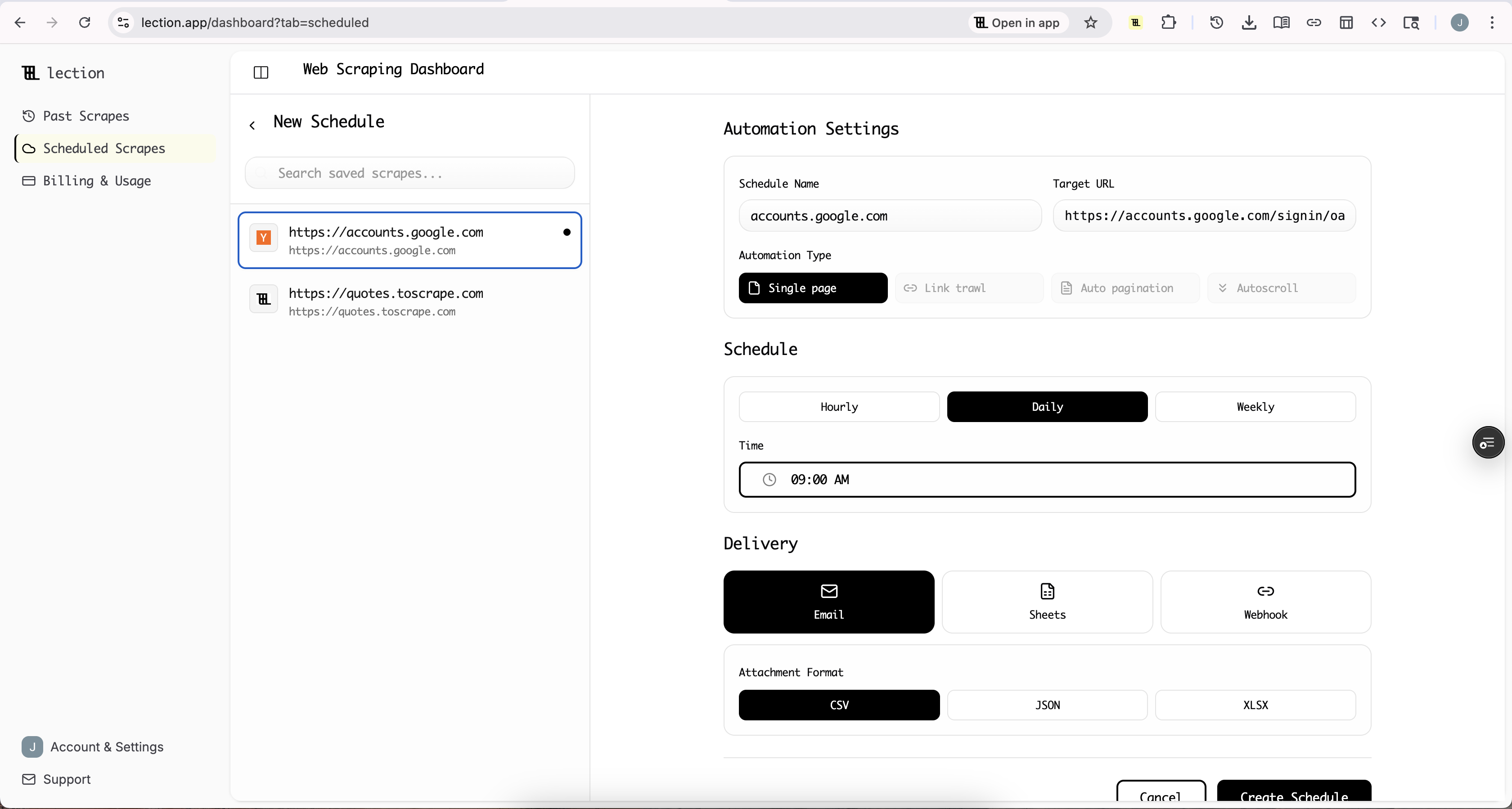

Building robust error handling is engineering work. If you'd rather extract data than debug HTTP, no-code tools handle this complexity for you.

Lection manages status code responses automatically:

- Follows redirects transparently

- Retries failed requests with appropriate delays

- Rotates IPs to avoid rate limits and blocks

- Runs in the cloud so temporary 503s don't require your attention

For APIs, established SERP providers and data services handle infrastructure concerns, returning only successful data or clear error messages.

Conclusion

HTTP status codes are the feedback mechanism between your scraper and target servers. Understanding what each code means, and what to do about it, transforms frustrating debugging sessions into systematic problem-solving.

Key takeaways:

- 200 is success, but validate content (CAPTCHAs can hide behind 200s)

- 403 means detection: rotate infrastructure and slow down

- 429 is rate limiting: respect Retry-After and implement backoff

- 503 can be legitimate or targeted: test in a real browser to know which

- Log everything for pattern analysis

Ready to skip the error handling and just get your data? Install Lection and extract structured data without debugging status codes.