If you have ever tried to extract data from websites at scale, you have probably run into "Access Denied" pages, mysterious CAPTCHAs, or requests that simply hang forever. What you are experiencing is the cat-and-mouse game between bots and the websites trying to stop them.

Understanding how websites detect and block automated traffic is not just academically interesting. It is essential knowledge for anyone doing web scraping, building integrations, or even trying to troubleshoot why their automation scripts suddenly stopped working.

This guide breaks down the major anti-bot techniques used in 2026, explains how each one works under the hood, and discusses ethical approaches to data extraction that respect both technical constraints and legal boundaries.

Why Websites Block Scrapers

Before diving into the techniques, it helps to understand the motivations. Websites invest in anti-bot measures for several legitimate reasons:

Protecting server resources. Bots can generate enormous traffic. A single misconfigured scraper can send thousands of requests per minute, potentially degrading performance for legitimate users or even causing outages.

Preventing competitive intelligence. E-commerce sites spend significant money on pricing optimization. They do not want competitors automatically monitoring every price change in real time.

Protecting user data. Social networks, review sites, and job boards contain personal information. Mass scraping of profiles, reviews, or contact details raises privacy concerns.

Preserving content value. Publishers and content sites monetize through advertising. Scrapers that extract and republish content without ads undermine their business model.

Stopping malicious activity. Not all bots are benign. Credential stuffing attacks, spam bots, and fraud attempts all use automated traffic.

Understanding these motivations helps frame why certain techniques exist and informs ethical approaches to data extraction.

Technique 1: CAPTCHAs

CAPTCHAs remain the most visible anti-bot defense. The name stands for "Completely Automated Public Turing test to tell Computers and Humans Apart."

Traditional CAPTCHAs

The original CAPTCHAs asked users to read distorted text and type it into a form field. These worked well initially but became less effective as optical character recognition improved.

reCAPTCHA v2 (Checkbox)

Google's "I'm not a robot" checkbox analyzes your behavior before and during the click. It tracks mouse movements, scroll patterns, and typing cadences. If everything looks human, you pass immediately. If something seems off, you get an image selection challenge.

reCAPTCHA v3 (Invisible)

The invisible version runs constantly in the background, scoring every visitor on a scale from 0.0 (definitely a bot) to 1.0 (definitely human). Site owners set thresholds for different actions. A low score might block checkout but allow browsing.
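As a rough illustration of how a site owner might act on such a score, the sketch below maps actions to minimum scores. The threshold values and the `decide` helper are assumptions made for this example, not values recommended by Google.

```python
# Hypothetical per-action thresholds; each site owner picks their own values.
THRESHOLDS = {"browse": 0.1, "login": 0.5, "checkout": 0.7}


def decide(action: str, score: float) -> str:
    """Return 'allow' or 'block' for a visitor score between 0.0 and 1.0."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    # Actions without an explicit entry fall back to an assumed middle threshold.
    threshold = THRESHOLDS.get(action, 0.5)
    return "allow" if score >= threshold else "block"


print(decide("browse", 0.3))    # allow
print(decide("checkout", 0.3))  # block
```

The same visitor score leads to different outcomes depending on how sensitive the action is, which is the behavior described above.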

hCaptcha

An alternative to Google's reCAPTCHA that works similarly but positions itself as more privacy-focused. It has gained adoption among sites concerned about sending data to Google.

Specialized Challenges

Some sites use custom challenges based on their domain. Dating sites might require identifying photos of real faces versus AI-generated ones. Ticketing sites might ask questions about the event being purchased.

Technique 2: Rate Limiting

Rate limiting restricts how many requests a single source can make within a time window.

IP-Based Limiting

The simplest approach tracks requests per IP address. Exceed the threshold (perhaps 100 requests per minute) and subsequent requests get blocked or throttled.
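A per-IP limit of this kind can be sketched with a sliding window of timestamps. This is a minimal in-memory illustration, assuming a single process; the class name, the tiny limit of 3, and the sample address are all made up for the example.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each key."""

    def __init__(self, limit: int = 100, window: float = 60.0):
        self.limit = limit
        self.window = window
        self._hits = defaultdict(deque)  # key -> timestamps of recent requests

    def allow(self, key: str, now: float) -> bool:
        hits = self._hits[key]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True


limiter = SlidingWindowLimiter(limit=3, window=60.0)
results = [limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3, 61)]
print(results)  # [True, True, True, False, True]
```

The fourth request is rejected because three requests already sit inside the window; by second 61 the two oldest have expired, so the request is allowed again.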

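Per-IP limits are weakened by address rotation, which takes two common forms:

Residential proxies. Scrapers rotate through pools of IP addresses so that no single address exceeds the threshold. Residential proxies are particularly effective because they appear to be regular home internet connections.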

IPv6 rotation. Some providers offer vast pools of IPv6 addresses, making it economically feasible to use a fresh IP for every request.

Account-Based Limiting

For authenticated sections, sites limit requests per account rather than per IP. This is harder to circumvent since creating accounts costs time and may require verification.

Endpoint-Specific Limits

APIs typically have stricter limits on expensive operations. Searching might allow 60 requests per minute while login attempts are limited to 5 per minute.
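One way to express per-endpoint limits is a token bucket keyed by client and endpoint. The sketch below reuses the 60 and 5 per-minute figures from the text; the endpoint paths, the default of 30, and the helper names are assumptions for illustration.

```python
from dataclasses import dataclass

# Hypothetical per-minute limits mirroring the figures in the text.
LIMITS_PER_MINUTE = {"/search": 60, "/login": 5}


@dataclass
class TokenBucket:
    capacity: float
    refill_per_sec: float
    tokens: float
    updated: float = 0.0

    def take(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


buckets = {}


def allow(client: str, endpoint: str, now: float) -> bool:
    limit = LIMITS_PER_MINUTE.get(endpoint, 30)  # assumed default for other endpoints
    key = (client, endpoint)
    if key not in buckets:
        buckets[key] = TokenBucket(limit, limit / 60.0, tokens=limit, updated=now)
    return buckets[key].take(now)


login_attempts = [allow("client-1", "/login", now=0.0) for _ in range(6)]
print(login_attempts)  # [True, True, True, True, True, False]
```

Because each endpoint has its own bucket, exhausting the login budget does not affect the same client's search requests.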

Dynamic Throttling

Sophisticated systems adjust limits based on current server load, the value of the content being accessed, and the reputation of the requester.
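How these inputs are combined varies from system to system and is rarely published. The sketch below is one plausible shape, assuming server load and requester reputation are both normalized to the range 0 to 1; the scaling factors are invented for the example.

```python
def effective_limit(base_limit: int, server_load: float, reputation: float) -> int:
    """Scale a base per-minute limit by server load and requester reputation (both 0..1)."""
    load = min(max(server_load, 0.0), 1.0)
    rep = min(max(reputation, 0.0), 1.0)
    load_factor = 1.0 - 0.5 * load   # a fully loaded server halves the limit
    rep_factor = 0.25 + 0.75 * rep   # the worst reputation keeps only a quarter
    return max(1, int(base_limit * load_factor * rep_factor))


print(effective_limit(60, server_load=0.0, reputation=1.0))  # 60
print(effective_limit(60, server_load=1.0, reputation=1.0))  # 30
print(effective_limit(60, server_load=1.0, reputation=0.0))  # 7
```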

Technique 3: Browser Fingerprinting

Fingerprinting collects dozens of attributes about your browser and device to create a unique identifier.

Screen and window properties. Resolution, color depth, viewport size, device pixel ratio, and available screen dimensions.

Browser capabilities. Installed plugins, supported MIME types, and supported WebGL extensions and parameters.

System information. Time zone, language preferences, installed fonts, and whether cookies and JavaScript are enabled.

Hardware indicators. Number of CPU cores, device memory, and the GPU vendor and renderer strings reported through WebGL.

Canvas fingerprinting. Websites draw invisible graphics and measure subtle rendering differences between devices.

Audio fingerprinting. Similar technique using the Web Audio API to detect differences in audio processing.

TCP/IP fingerprinting. Server-side analysis of connection characteristics that reveal operating system and network stack details.

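The combination of these attributes creates a near-unique fingerprint. No single attribute identifies a browser, but each one narrows the pool of possible matches. Published studies have found that a large share of browsers in samples of hundreds of thousands of users carried a fingerprint that no other browser in the sample shared, although uniqueness rates vary with the population and the attributes measured.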
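To make the idea of combining attributes concrete, here is a minimal sketch that hashes a set of collected values into one identifier. The attribute names and values are hypothetical, and production systems typically use fuzzier matching, since attributes such as browser version drift over time.

```python
import hashlib
import json


def fingerprint_id(attributes: dict) -> str:
    """Hash a dict of collected attributes into a stable identifier."""
    # sort_keys makes the result independent of the order of collection.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical attribute values for illustration only.
visitor = {
    "screen": "1920x1080x24",
    "timezone": "America/New_York",
    "languages": ["en-US", "en"],
    "cpu_cores": 8,
    "canvas_hash": "a3f1c9",
}
print(fingerprint_id(visitor)[:16])
```

Changing any single attribute produces a different identifier, which is why an exact hash like this is only a starting point.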

Why Scrapers Get Caught

Automated browsers often leak their nature through fingerprinting:

WebDriver detection. Browsers controlled by Selenium, Puppeteer, or Playwright typically report the JavaScript property navigator.webdriver as true, whereas a browser launched normally by a user reports it as false.

Headless browser signatures. Headless Chrome and Firefox have subtle differences in how they render content, handle fonts, or report screen dimensions.

Inconsistent environments. A claimed "Windows Chrome" browser running with Linux-specific behaviors, missing expected plugins, or having impossible hardware capabilities triggers detection.
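The checks above can be sketched as a server-side function that looks for contradictions in the signals a page reports. The `signals` dictionary and its keys are assumptions for this example; real detection scripts collect far more and weigh the results rather than treating each flag as decisive.

```python
def consistency_flags(signals: dict) -> list:
    """Return a list of reasons the reported environment looks automated."""
    flags = []
    ua = signals.get("user_agent", "")
    platform = signals.get("platform", "")

    if signals.get("webdriver") is True:
        flags.append("webdriver_flag_set")
    # The User-Agent claims Windows while navigator.platform reports Linux.
    if "Windows" in ua and platform.startswith("Linux"):
        flags.append("ua_platform_mismatch")
    # Headless Chrome announces itself in its default User-Agent string.
    if "HeadlessChrome" in ua:
        flags.append("headless_user_agent")
    if signals.get("hardware_concurrency", 1) < 1:
        flags.append("impossible_cpu_count")
    return flags


# Hypothetical signals from a Linux automation host claiming to be Windows.
sample = {
    "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "platform": "Linux x86_64",
    "webdriver": True,
    "hardware_concurrency": 8,
}
print(consistency_flags(sample))  # ['webdriver_flag_set', 'ua_platform_mismatch']
```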

Technique 4: Behavioral Analysis

Beyond static fingerprints, sites analyze how visitors interact with pages.

Mouse Movement Patterns

Humans move the mouse in natural curves with slight variations. Bots often move in straight lines or teleport the cursor directly to click targets.
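One simple feature a detector might compute is how straight a pointer path is. The sketch below assumes the page has recorded a list of (x, y) samples; the function name and the sample coordinates are illustrative, and real systems combine many such features.

```python
import math


def straightness(points) -> float:
    """Ratio of straight-line distance to travelled path length (1.0 = perfectly straight)."""
    if len(points) < 2:
        return 1.0
    path = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    if path == 0:
        return 1.0
    return math.dist(points[0], points[-1]) / path


bot_path = [(0, 0), (50, 50), (100, 100)]              # moves along a straight line
human_path = [(0, 0), (40, 10), (70, 45), (100, 100)]  # curves toward the target

print(round(straightness(bot_path), 3))
print(round(straightness(human_path), 3))
```

A ratio very close to 1.0 across many movements suggests scripted motion, while curved human paths score noticeably lower.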

Scroll Behavior

People scroll irregularly, pausing at interesting content. Automated scrolling tends to be smooth and consistent, often scrolling the entire page at once.

Click Patterns

Humans click with slight position variations and timing irregularities. Bot clicks are often perfectly centered on elements with consistent timing.
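Timing regularity can be measured with the coefficient of variation of the gaps between clicks. This is a sketch assuming click timestamps in seconds; the sample sequences are invented, and any cutoff for what counts as too regular would have to be tuned on real traffic.

```python
import statistics


def timing_cv(timestamps):
    """Coefficient of variation of the intervals between events, or None if too few."""
    intervals = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if len(intervals) < 2:
        return None
    mean = statistics.mean(intervals)
    if mean == 0:
        return 0.0
    return statistics.pstdev(intervals) / mean


bot_clicks = [0.0, 0.5, 1.0, 1.5, 2.0]    # identical gaps between clicks
human_clicks = [0.0, 0.8, 2.9, 3.4, 6.1]  # irregular gaps

print(timing_cv(bot_clicks))  # 0.0
print(round(timing_cv(human_clicks), 2))
```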

Navigation Flow

Real users browse somewhat unpredictably, clicking links, going back, and wandering. Bots often follow perfectly systematic patterns like depth-first crawling of every link.

Time on Page

Bots that scrape instantly look different from humans who spend time reading content.

Technique 5: Honeypots

Honeypots are traps designed to catch automated systems by tempting them into actions that humans would never take.

Hidden Elements

The simplest honeypot is a hidden form field or link. Using CSS, the element is made invisible to humans (using display:none or positioned off-screen). Humans cannot interact with elements they cannot see, but naive scrapers following all links or filling all forms will interact with the trap.
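On the server, a hidden-field honeypot reduces to a single check. The sketch below assumes the form contains an input named `website` that is hidden with CSS; the field name is hypothetical.

```python
HONEYPOT_FIELD = "website"  # hypothetical field name, hidden from humans with CSS


def is_probable_bot(form_data: dict) -> bool:
    """A non-empty honeypot field means something filled in an input humans cannot see."""
    return bool(form_data.get(HONEYPOT_FIELD, "").strip())


print(is_probable_bot({"email": "a@example.com", "website": ""}))                # False
print(is_probable_bot({"email": "a@example.com", "website": "http://spam.example"}))  # True
```

One caveat with this design is that browser autofill and some assistive technologies can interact with hidden fields, so sites often treat a filled honeypot as a strong signal rather than absolute proof.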

Fake Data

Some sites present fake content visible only to scrapers. If the fake content appears in a scraper's output (or later shows up published elsewhere), the site knows exactly which requests corresponded to scraping.

Link Traps

Pages might contain links to special URLs that serve only to detect bots. Clicking these links marks the session as automated and triggers blocking.
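A link trap can be sketched as a set of paths that no human-facing page ever asks visitors to follow. The trap path, the in-memory session set, and the status codes below are assumptions for illustration.

```python
TRAP_PATHS = {"/internal/do-not-follow"}  # hypothetical URL linked only from hidden markup
flagged_sessions = set()


def handle_request(session_id: str, path: str) -> int:
    """Return an HTTP status code, blocking sessions that have touched a trap URL."""
    if path in TRAP_PATHS:
        flagged_sessions.add(session_id)
    return 403 if session_id in flagged_sessions else 200


print(handle_request("s1", "/products"))                # 200
print(handle_request("s1", "/internal/do-not-follow"))  # 403
print(handle_request("s1", "/products"))                # 403
```

Once a session touches the trap, every later request from it is refused, while other sessions are unaffected.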

Timing Traps

Resources loaded immediately after the main page (before a human could possibly have scrolled to them) can indicate automated pre-fetching.

Technique 6: HTTP Header Analysis

Automated requests often differ from browser requests in subtle ways that servers can detect.

User-Agent Analysis

The User-Agent string identifies the browser and operating system. Common red flags include:

- Default library User-Agents (python-requests, curl, Java)

- Outdated browser versions that real users would have updated

- User-Agents that do not match other fingerprint details

- Rare or unusual User-Agent strings that receive disproportionate traffic
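The first two red flags lend themselves to simple pattern checks. In this sketch the list of library prefixes and the minimum Chrome version are assumptions chosen for the example; a real system would maintain both from observed traffic.

```python
import re

# Default User-Agent prefixes used by common HTTP clients.
LIBRARY_UA = re.compile(r"^(python-requests|curl|java|go-http-client|wget)\b", re.IGNORECASE)
CHROME_VERSION = re.compile(r"Chrome/(\d+)\.")
MIN_CHROME_MAJOR = 120  # hypothetical cutoff for an outdated browser


def user_agent_flags(ua: str) -> list:
    flags = []
    if not ua:
        flags.append("missing")
    elif LIBRARY_UA.search(ua):
        flags.append("default_library")
    match = CHROME_VERSION.search(ua or "")
    if match and int(match.group(1)) < MIN_CHROME_MAJOR:
        flags.append("outdated_browser")
    return flags


print(user_agent_flags("python-requests/2.31.0"))  # ['default_library']
print(user_agent_flags("Mozilla/5.0 (Windows NT 10.0) Chrome/90.0.4430.93 Safari/537.36"))  # ['outdated_browser']
```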

Missing or Unusual Headers

Real browsers send consistent sets of headers. A missing Accept-Language header, unusual Accept-Encoding values, or an HTTP/1.0 request (modern browsers speak HTTP/1.1 at minimum and usually negotiate HTTP/2 or HTTP/3) can trigger flags.
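A basic version of this check compares the headers received against a set every mainstream browser sends. The expected-header list and flag names below are assumptions for illustration; real systems also look at header order and exact values.

```python
EXPECTED_HEADERS = ("accept", "accept-language", "accept-encoding", "user-agent")


def header_flags(headers: dict, http_version: str) -> list:
    """Flag missing browser headers and an unusually old protocol version."""
    present = {name.lower() for name in headers}  # header names are case-insensitive
    flags = ["missing_" + name for name in EXPECTED_HEADERS if name not in present]
    if http_version == "HTTP/1.0":
        flags.append("http_1_0")
    return flags


script_request = {"User-Agent": "Mozilla/5.0", "Accept": "*/*"}
print(header_flags(script_request, "HTTP/1.0"))
# ['missing_accept-language', 'missing_accept-encoding', 'http_1_0']
```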

TLS Fingerprinting

The way your client negotiates the HTTPS connection reveals information about its nature. JA3 and JA4 are fingerprinting methods that analyze TLS handshake patterns.

Different HTTP libraries produce different TLS fingerprints. A request whose User-Agent claims to be Chrome but whose handshake matches the fingerprint of a Python TLS stack immediately looks suspicious.
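To show what a JA3 fingerprint is made of, the sketch below builds one from already-parsed ClientHello fields. JA3 joins five fields (TLS version, cipher suites, extensions, elliptic curves, and point formats) as decimal values and takes the MD5 of the result, ignoring GREASE placeholder values. The input numbers here are made up for the example and do not correspond to any particular client.

```python
import hashlib

# GREASE placeholder values (0x0a0a, 0x1a1a, ... 0xfafa) are excluded from JA3.
GREASE = {0x0A0A + 0x1010 * i for i in range(16)}


def ja3(version, ciphers, extensions, curves, point_formats):
    """Return (ja3_string, md5_hex) for parsed ClientHello fields."""
    def join(values):
        return "-".join(str(v) for v in values if v not in GREASE)

    ja3_string = ",".join(
        [str(version), join(ciphers), join(extensions), join(curves), join(point_formats)]
    )
    return ja3_string, hashlib.md5(ja3_string.encode("ascii")).hexdigest()


# Illustrative values only; 771 is the decimal form of 0x0303 (TLS 1.2).
text, digest = ja3(771, [0x0A0A, 4865, 4866], [0, 23, 65281], [29, 23, 24], [0])
print(text)  # 771,4865-4866,0-23-65281,29-23-24,0
print(digest)
```

Because the cipher and extension lists depend on the TLS library rather than on the User-Agent header, two clients sending identical headers can still produce different digests.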

Technique 7: IP Reputation and Geolocation

Not all IP addresses are treated equally.

Known Bad IPs

Security services maintain lists of IP addresses associated with malicious activity, data centers, and proxy services. Requests from these IPs face greater scrutiny.

Datacenter Detection

Residential IP addresses get more trust than datacenter IPs. If your request comes from AWS, Google Cloud, or a known hosting provider, sites may apply stricter verification.
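Datacenter detection usually comes down to checking an address against known network ranges. The ranges below are placeholders taken from the reserved documentation blocks, standing in for the much larger lists a real deployment would load from cloud providers or a commercial IP intelligence feed.

```python
import ipaddress

# Placeholder ranges (reserved documentation blocks), not real provider ranges.
DATACENTER_NETWORKS = [
    ipaddress.ip_network(cidr) for cidr in ("198.51.100.0/24", "203.0.113.0/24")
]


def is_datacenter_ip(address: str) -> bool:
    """Return True if the address falls inside any listed datacenter range."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in DATACENTER_NETWORKS)


print(is_datacenter_ip("203.0.113.42"))  # True
print(is_datacenter_ip("192.0.2.1"))     # False
```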

Geographic Inconsistency

A request whose headers and browser settings suggest a US user (for example, a US English Accept-Language value and a US time zone) but whose IP address geolocates to Eastern Europe raises flags. Sophisticated systems check for consistency between claimed and actual location.

Behavioral History

IP addresses and fingerprints can accumulate reputation scores over time. Past suspicious activity from an identifier makes future requests more likely to face challenges.
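One way to model accumulating reputation is a penalty score that decays over time, so old incidents matter less than recent ones. The half-life, the penalty sizes, and the class itself are assumptions for this sketch rather than a description of any specific vendor's scoring.

```python
HALF_LIFE_SECONDS = 24 * 3600  # hypothetical: penalties halve every 24 hours


class Reputation:
    """Track a decaying suspicion score per identifier (IP address or fingerprint)."""

    def __init__(self):
        self._scores = {}  # identifier -> (score, time of last update)

    def score(self, ident: str, now: float) -> float:
        score, last = self._scores.get(ident, (0.0, now))
        return score * 0.5 ** ((now - last) / HALF_LIFE_SECONDS)

    def record(self, ident: str, penalty: float, now: float) -> None:
        # Decay the existing score to the present before adding the new penalty.
        self._scores[ident] = (self.score(ident, now) + penalty, now)


rep = Reputation()
rep.record("203.0.113.7", penalty=10.0, now=0)
print(rep.score("203.0.113.7", now=0))          # 10.0
print(rep.score("203.0.113.7", now=24 * 3600))  # 5.0
```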

Ethical Data Extraction Approaches

Understanding detection mechanisms is not about evading them at all costs. Ethical scrapers work within boundaries.

Respect robots.txt

The robots.txt file, standardized as the Robots Exclusion Protocol in RFC 9309, tells crawlers which parts of a site they should not access. While it is generally not legally binding in itself, respecting it demonstrates good faith.
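Python's standard library can evaluate robots.txt rules directly. The sketch below parses an inline example file so it runs without network access; the rules, the URLs, and the `example-research-bot` agent name are all made up for illustration. Against a live site you would point the parser at the real file with `set_url()` and `read()`.

```python
from urllib.robotparser import RobotFileParser

# Example rules for illustration; a real crawler would fetch the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

AGENT = "example-research-bot"  # hypothetical crawler name
print(parser.can_fetch(AGENT, "https://example.com/products/1"))    # True
print(parser.can_fetch(AGENT, "https://example.com/private/data"))  # False
print(parser.crawl_delay(AGENT))                                    # 5
```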

Use Official APIs

Many sites offer APIs specifically for programmatic access. These provide stable, documented access and are explicitly permitted. Always check if an API exists before scraping.

Request Reasonable Volumes

Even when scraping is permitted, overwhelming servers is not. Implement delays between requests and respect any rate limiting you encounter.
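A polite client can compute its own wait time between requests. The sketch below assumes the server signals overload with a Retry-After value given in seconds (the header can also carry an HTTP date, which is not handled here); the function name, base delay, and jitter size are illustrative choices.

```python
import random
from typing import Optional


def next_delay(
    base_delay: float,
    failures: int,
    retry_after: Optional[float] = None,
    max_delay: float = 300.0,
) -> float:
    """Seconds to wait before the next request."""
    if retry_after is not None:
        # The server said how long to wait; never go below that.
        return max(float(retry_after), base_delay)
    # Otherwise back off exponentially after consecutive failures.
    backoff = min(max_delay, base_delay * (2 ** failures))
    # Up to 10% random jitter keeps many clients from retrying in lockstep.
    return backoff + random.uniform(0, 0.1 * backoff)


print(next_delay(2.0, failures=0, retry_after=30))  # 30.0
print(next_delay(2.0, failures=2))                  # between 8.0 and 8.8
```

In a real loop, the result would be passed to `time.sleep()` before each request, with `failures` reset to zero after a successful response.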

Identify Yourself

Consider using a descriptive User-Agent that names your scraper and provides contact information, such as a URL or email address. Some sites allowlist scrapers they recognize as legitimate.

Follow Terms of Service

Read and follow the site's terms of service. Some sites explicitly prohibit scraping; others have specific provisions for it.

How Lection Approaches Data Extraction

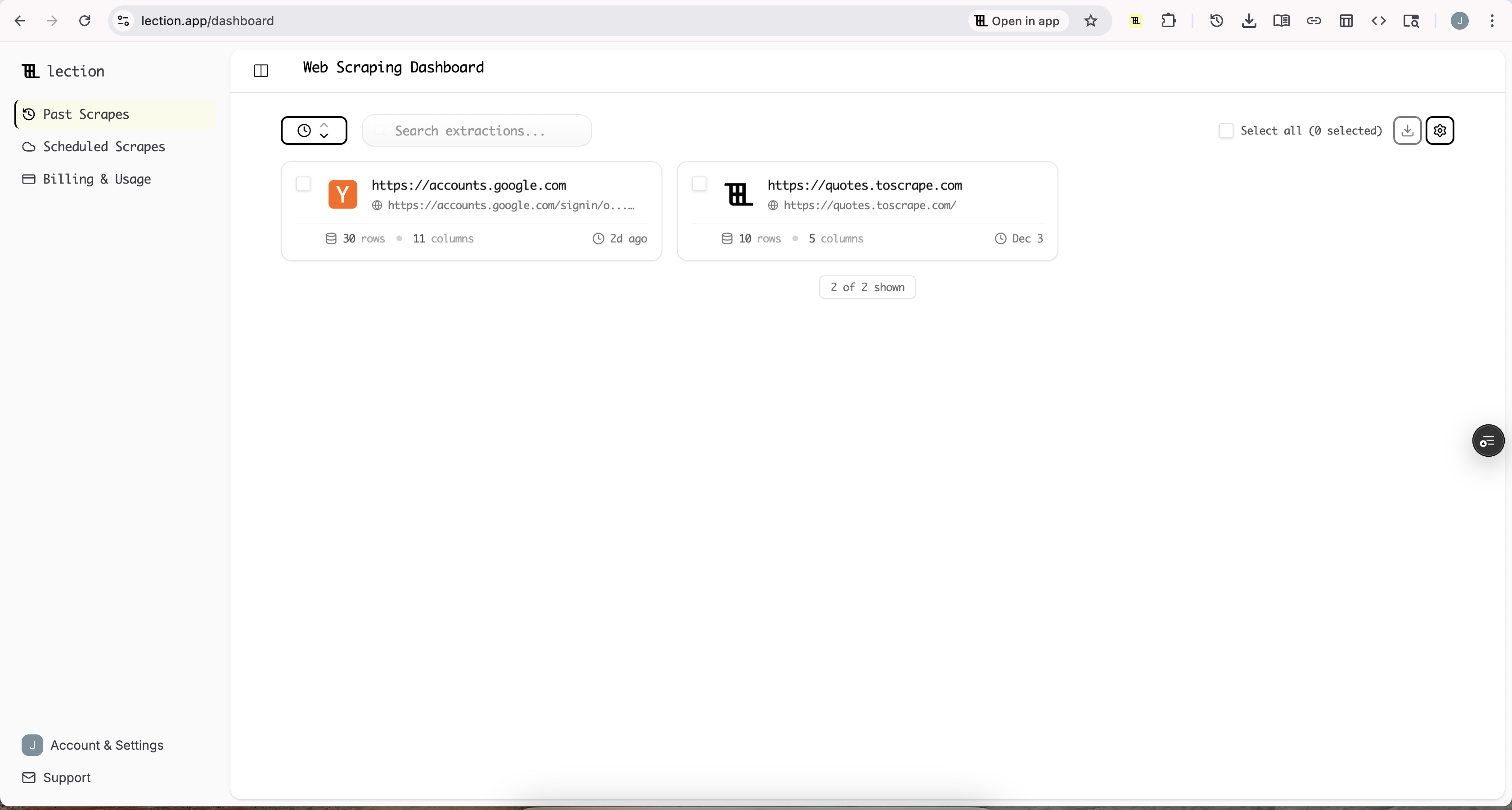

Tools like Lection take a fundamentally different approach to data extraction that works with these technical realities rather than against them.

Real browser rendering. Lection uses actual browser instances, not HTTP libraries. Pages load with JavaScript execution, just as they would for a regular visitor.

Natural interaction patterns. The AI-powered extraction navigates pages the way a human would, with natural timing and interaction patterns.

Respectful access. Lection operates at human-realistic speeds, not the thousands-of-requests-per-second pace that triggers alarms.

No coding required. Because Lection handles the technical complexity, you focus on describing what data you need rather than fighting detection systems.

The result is reliable data extraction that works on modern JavaScript-heavy sites without constantly breaking due to anti-bot measures.

Key Takeaways

Anti-bot measures exist for legitimate reasons, primarily protecting servers, users, and business interests. The major techniques include CAPTCHAs, rate limiting, fingerprinting, behavioral analysis, honeypots, header analysis, and IP reputation systems.

Understanding these systems helps you make informed decisions about data extraction approaches. The most sustainable path is working within boundaries: using APIs when available, respecting robots.txt, and choosing tools that extract data responsibly.

For sites that do permit extraction, tools like Lection provide a middle path: real browser-based access that works reliably without requiring you to become an expert in bypassing detection systems.