Data is the currency of the modern web, and in 2025, the demand for external data has never been higher. As businesses increasingly rely on real-time market intelligence, web scraping has evolved from a niche developer activity into a core business function.

We analyzed scraping patterns across thousands of extraction projects to identify the most targeted websites. The results paint a clear picture: the "Big Three"—Amazon, LinkedIn, and Zillow—continue to dominate, but new platforms are rapidly gaining traction as companies seek more granular social and local data.

This report breaks down the most scraped websites of 2025, the specific data points businesses are after, and the use cases driving this massive volume of automated extraction.

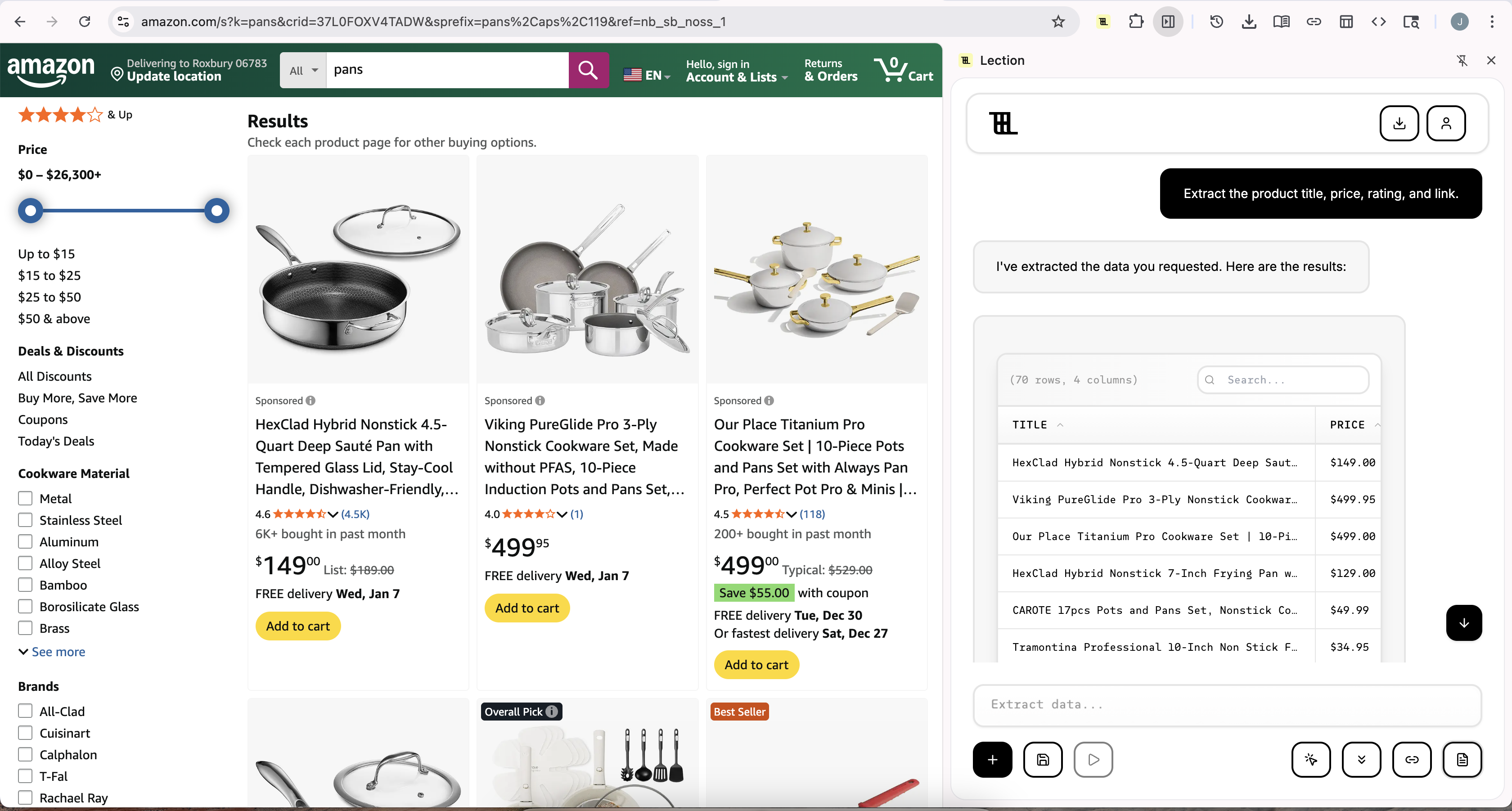

1. Amazon: The E-Commerce Pulse

Category: E-Commerce & Retail

Primary Use Cases: Price Monitoring, Product Research, Review Analysis

Amazon remains the undisputed king of scraped data. It is not just a store; it is the global index of consumer goods pricing and sentiment. E-commerce brands, large and small, scrape Amazon daily to stay competitive.

What’s Being Extracted?

- Pricing Data: Real-time price tracking that feeds your own pricing strategy (dynamic pricing).

- Product Rankings: Monitoring "Best Seller" rank fluctuations to gauge market trends.

- Customer Reviews: Sentiment analysis on thousands of reviews to identify product flaws or feature requests.

- Stock Levels: Estimating competitor inventory based on availability data.

Why It Matters

For a brand selling headphones, knowing that a competitor lowered their price by 15% this morning is mission-critical. Scraping Amazon provides the immediate feedback loop that official API reports (which are often delayed or limited) cannot match.
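As a rough illustration of that feedback loop, the sketch below compares two price snapshots and flags drops of 15% or more. The snapshot format (a dict of product id to price), the product ids, and the function name `find_price_drops` are assumptions made for the example, not part of any specific tool.

```python
from dataclasses import dataclass


@dataclass
class PriceChange:
    product_id: str
    old_price: float
    new_price: float

    @property
    def pct_change(self) -> float:
        # Negative values mean the price went down.
        return (self.new_price - self.old_price) * 100 / self.old_price


def find_price_drops(previous, current, threshold_pct=15.0):
    """Return products whose price fell by at least threshold_pct percent."""
    drops = []
    for product_id, old_price in previous.items():
        new_price = current.get(product_id)
        if new_price is None or old_price <= 0:
            continue  # missing from the new snapshot, or unusable old price
        change = PriceChange(product_id, old_price, new_price)
        if change.pct_change <= -threshold_pct:
            drops.append(change)
    return drops


# Hypothetical snapshots: product id -> price in USD.
yesterday = {"headphones-a": 100.00, "headphones-b": 80.00}
today = {"headphones-a": 85.00, "headphones-b": 79.00}

drops = find_price_drops(yesterday, today)
for d in drops:
    print(f"{d.product_id}: {d.old_price:.2f} -> {d.new_price:.2f} ({d.pct_change:.1f}%)")
```

In practice the two snapshots would come from consecutive scrape runs, and the threshold would be tuned per product category.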

2. LinkedIn: The B2B Goldmine

Category: Professional Network / B2B

Primary Use Cases: Lead Generation, Recruitment, Sales Intelligence

LinkedIn is the world's largest up-to-date database of professional profiles and company firmographics. For B2B sales teams and recruiters, it is the single most valuable source of lead data.

What’s Being Extracted?

- Profile Data: Job titles, current companies, tenure, and skills.

- Company Data: Employee count, industry, headquarters location, and recent growth.

- Job Postings: Analyzing hiring surges to predict company growth or technological shifts.

Why It Matters

Sales teams use this data to trigger outreach. A company hiring 50 new sales reps is a prime target for sales enablement software. A CTO changing jobs is a signal to re-engage an old account. Manual prospecting on LinkedIn is slow; automated extraction turns it into a scalable pipeline.

Note: LinkedIn has strict anti-bot measures. Successful extraction typically requires tools that mimic human behavior closely, like Lection, to scrape responsibly without triggering alarms.

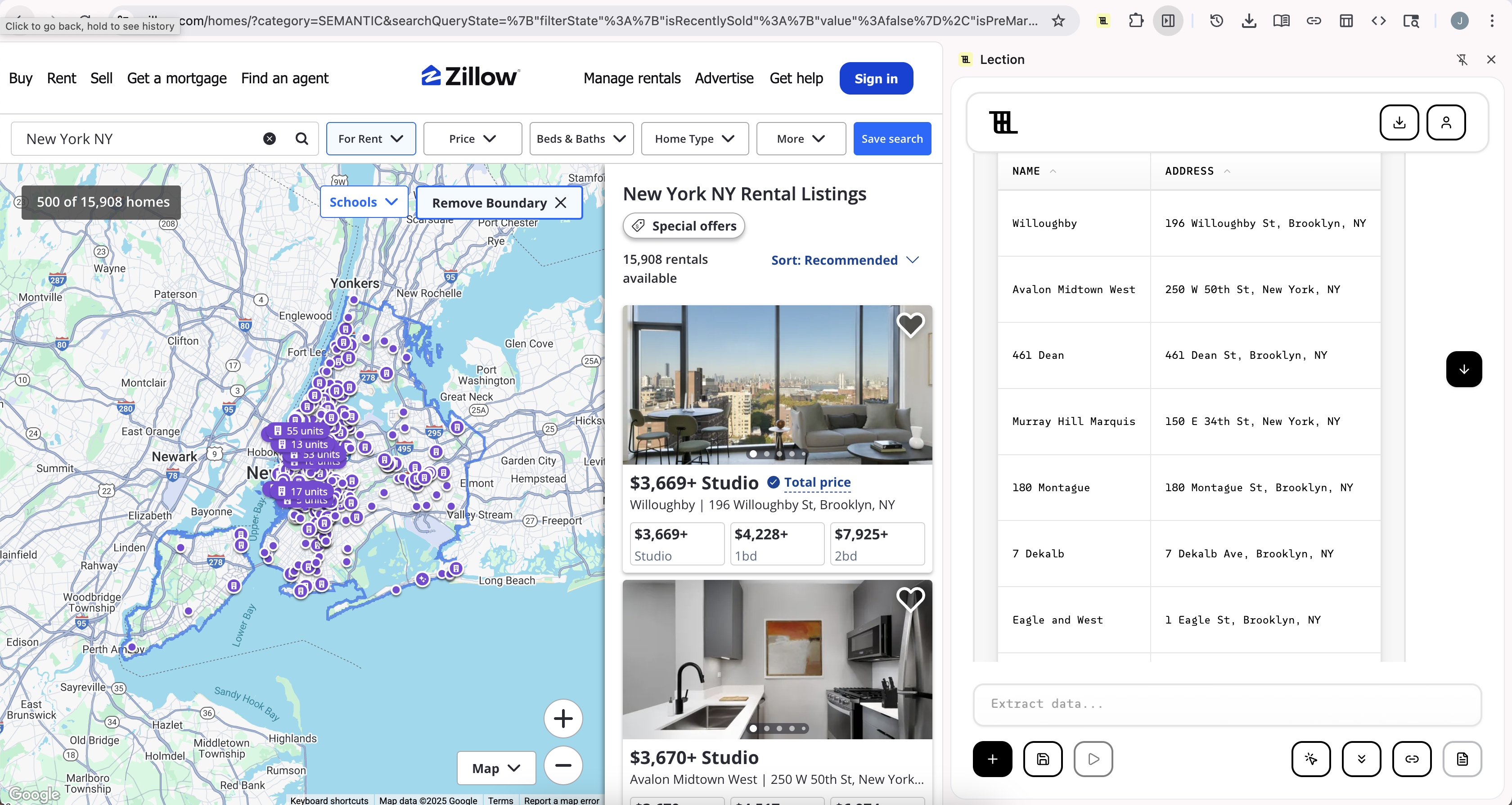

3. Zillow: The Real Estate Ledger

Category: Real Estate

Primary Use Cases: Investment Analysis, Market Research, Lead Gen for Agents

Real estate investors and institutional buyers run on data. Zillow (and competitors like Redfin) aggregates listing data that is otherwise fragmented across hundreds of local MLS systems.

What’s Being Extracted?

- Property Details: Price, square footage, lot size, year built.

- Zestimate vs. List Price: Identifying undervalued properties.

- Days on Market: Spotting stale listings that might be open to lowball offers.

- Rent Estimates: Calculating potential yield for rental properties.

Why It Matters

In a volatile housing market, speed is everything. Investors scrape Zillow to flag properties meeting specific "buy box" criteria (e.g., 3 bed, 2 bath, under $400k, >5% rental yield) the moment they hit the market.
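A "buy box" check like the one above can be sketched in a few lines once listings are in a structured form. The `Listing` fields, the sample addresses, and the choice of gross yield (annual rent divided by list price, before expenses) are assumptions for illustration. The bed and bath counts are treated as minimums, which is one possible reading of the criteria.

```python
from dataclasses import dataclass


@dataclass
class Listing:
    address: str
    beds: int
    baths: float
    price: float         # list price in USD
    monthly_rent: float  # estimated monthly rent in USD


def gross_rental_yield(listing: Listing) -> float:
    """Annual rent as a percentage of list price (gross, before expenses)."""
    return listing.monthly_rent * 12 / listing.price * 100


def matches_buy_box(listing, min_beds=3, min_baths=2,
                    max_price=400_000, min_yield_pct=5.0) -> bool:
    """True if the listing meets every buy-box criterion."""
    return (
        listing.beds >= min_beds
        and listing.baths >= min_baths
        and listing.price < max_price
        and gross_rental_yield(listing) > min_yield_pct
    )


# Hypothetical scraped listings.
listings = [
    Listing("12 Oak St", 3, 2, 350_000, 1_800),  # about 6.2% yield: match
    Listing("9 Elm Ave", 4, 3, 450_000, 2_500),  # over the price cap
    Listing("5 Pine Rd", 3, 2, 390_000, 1_500),  # about 4.6% yield: too low
]

matches = [l.address for l in listings if matches_buy_box(l)]
print(matches)
```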

4. Google Maps: Hyper-Local Business Intel

Category: Local Business Directory

Primary Use Cases: Local SEO, Sales Prospecting, Competitive Mapping

Google Maps has quietly become a scraping giant. It holds the most accurate index of local businesses, operating hours, and customer sentiment.

What’s Being Extracted?

- Business Contact Info: Phone numbers and websites for cold outreach.

- Review Counts & Ratings: Identifying local businesses with reputation management needs.

- Service Areas: Mapping where competitors operate.

Why It Matters

Agencies selling marketing services to restaurants, plumbers, or dentists thrive on this data. "Find me every gym in Chicago with a rating below 4.0" is a classic query that generates a highly targeted lead list for a reputation management agency.
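Once business records have been extracted, the gym query above reduces to a simple filter. The record fields (`name`, `category`, `city`, `rating`) and the sample businesses below are made up for the example; real exports will have their own column names.

```python
def find_low_rated(businesses, category, city, max_rating=4.0):
    """Return businesses in a category and city rated below max_rating,
    lowest-rated first. Records without a rating are skipped."""
    matches = [
        b for b in businesses
        if b["category"] == category
        and b["city"] == city
        and b.get("rating") is not None
        and b["rating"] < max_rating
    ]
    return sorted(matches, key=lambda b: b["rating"])


# Hypothetical extracted records.
businesses = [
    {"name": "Iron Temple Gym", "category": "gym", "city": "Chicago", "rating": 3.4},
    {"name": "Lakeview Fitness", "category": "gym", "city": "Chicago", "rating": 4.6},
    {"name": "Loop Strength Club", "category": "gym", "city": "Chicago", "rating": 3.9},
    {"name": "Deep Dish Pizza Co.", "category": "restaurant", "city": "Chicago", "rating": 3.2},
    {"name": "Milwaukee Barbell", "category": "gym", "city": "Milwaukee", "rating": 3.1},
]

leads = find_low_rated(businesses, category="gym", city="Chicago")
print([b["name"] for b in leads])
```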

5. Indeed: The Labor Market Barometer

Category: Jobs & Recruitment

Primary Use Cases: Labor Market Analytics, Salary Benchmarking

While LinkedIn captures the professionals, Indeed captures the open roles. It is the raw feed of global hiring intent.

What’s Being Extracted?

- Salary Ranges: Benchmarking compensation against competitors.

- Skill Requirements: Identifying trending technologies (e.g., "React" vs. "Vue" mentions).

- Hiring Volume: Measuring economic health by industry or region.
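Tracking skill mentions such as "React" vs. "Vue" can be approximated by counting how many postings mention each term. The sketch below is one simple way to do it, assuming postings are available as plain-text strings; the sample postings and the function name `count_skill_mentions` are hypothetical. It matches whole words only, so "reactive" does not count as "React".

```python
import re
from collections import Counter


def count_skill_mentions(postings, skills):
    """Count how many postings mention each skill at least once.

    Matching is case-insensitive and requires the skill not to be part of
    a longer word, so "Vue.js" counts for "Vue" but "reactive" does not
    count for "React".
    """
    counts = Counter({skill: 0 for skill in skills})
    for text in postings:
        for skill in skills:
            pattern = r"(?<!\w)" + re.escape(skill) + r"(?!\w)"
            if re.search(pattern, text, flags=re.IGNORECASE):
                counts[skill] += 1
    return counts


# Hypothetical job posting texts.
postings = [
    "Senior frontend engineer with React and TypeScript experience.",
    "We build reactive dashboards in Vue.js.",
    "Full-stack role: React, Node.js, PostgreSQL.",
]

mentions = count_skill_mentions(postings, ["React", "Vue"])
print(dict(mentions))
```

Counting postings rather than raw occurrences keeps a single posting that repeats a keyword many times from skewing the trend.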

Why It Matters

HR departments use this to ensure their offers are competitive. Hedge funds use hiring data as an alternative data source to predict company performance before earnings calls.

How to Scrape These Sites (Without Getting Blocked)

Scraping these major platforms presents two challenges: complexity and defense.

- Complexity: Sites like Zillow and Indeed use heavy JavaScript. They respond with empty pages to simple HTTP requests (like `curl` or Python's `requests`). You need a browser-based scraper to render the page content.

- Defense: Amazon and LinkedIn employ sophisticated bot detection. If you request 100 pages in 10 seconds, you will be blocked.

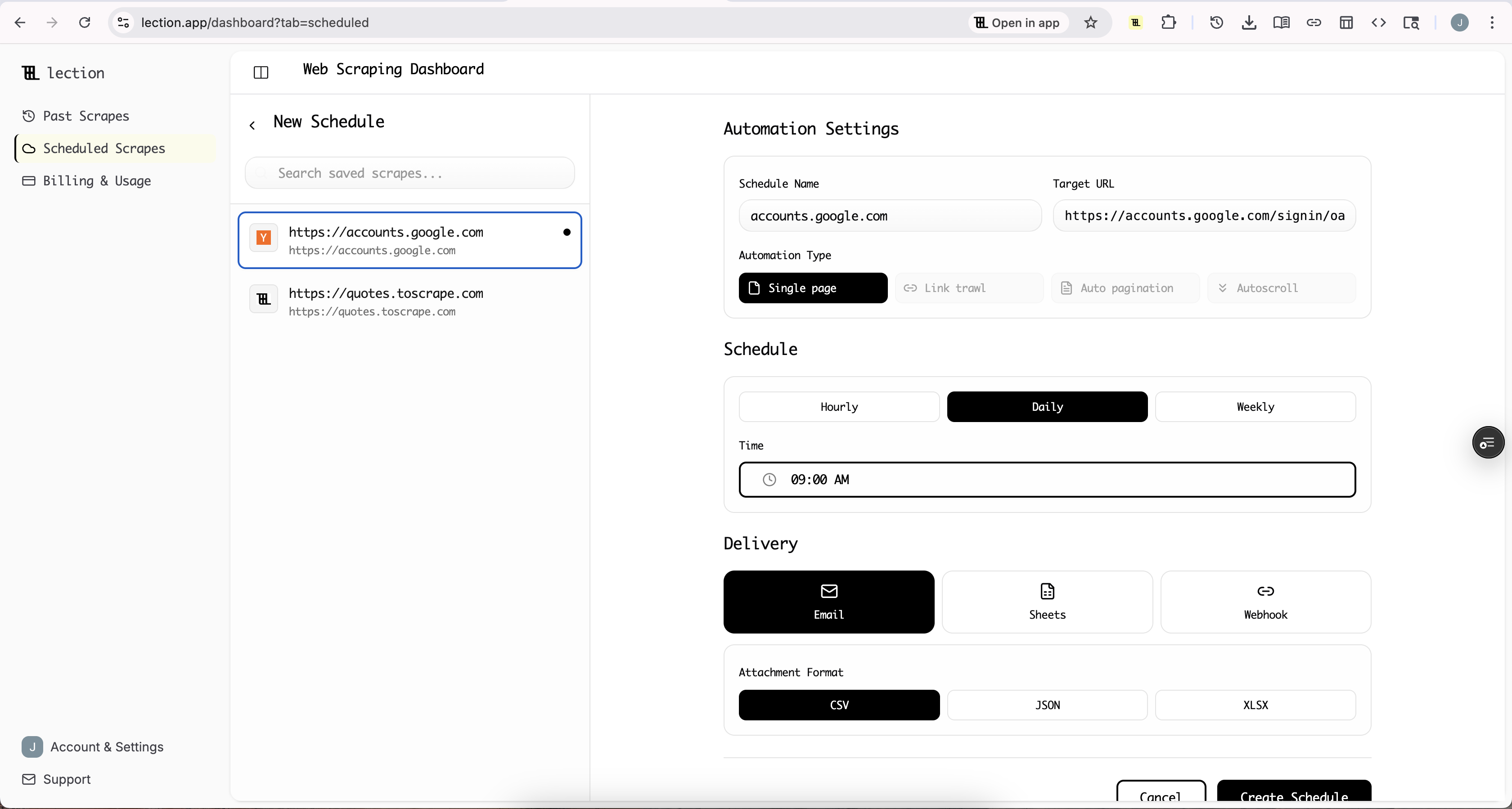

The Lection Approach

Tools like Lection solve both problems by running directly in your browser.

- It sees what you see: Because it uses your actual Chrome instance, it renders JavaScript perfectly.

- It acts like you: Lection mimics human scrolling and clicking, and can use your existing session cookies to access data behind login walls (like LinkedIn search results).

For large-scale projects, Lection's Cloud Scraping allows you to offload the work, rotating IPs automatically to extract thousands of records without interruption.

Legal and Ethical Note

Just because you can scrape a site doesn't always mean you should. When scraping high-profile targets:

- Respect robots.txt: Check the site's policy on automated access.

- Rate Limiting: Don't hammer their servers. Use polite delays between requests.

- Personal Data: Be extremely careful when scraping PII (Personally Identifiable Information), especially from social platforms (GDPR/CCPA compliance).
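The first two points can be sketched with Python's standard library. The example below parses a robots.txt body with `urllib.robotparser`, filters out disallowed URLs, and spaces out requests with a delay. The robots.txt content, the `example-bot` user agent, the example.com URLs, and the helper names are all assumptions for illustration; the actual fetching step is left out.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "example-bot"  # hypothetical user agent name


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse a robots.txt body that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(parser, urls, user_agent=USER_AGENT):
    """Keep only the URLs robots.txt permits for this user agent."""
    return [u for u in urls if parser.can_fetch(user_agent, u)]


def polite_iter(urls, delay_seconds=2.0, sleep=time.sleep):
    """Yield URLs one at a time, pausing between consecutive items."""
    for i, url in enumerate(urls):
        if i > 0:
            sleep(delay_seconds)
        yield url


# Hypothetical robots.txt content for the example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 1
"""

parser = build_parser(ROBOTS_TXT)
candidates = [
    "https://example.com/products",
    "https://example.com/private/accounts",
    "https://example.com/reviews",
]
allowed = allowed_urls(parser, candidates)
# Use the site's Crawl-delay if it declares one, else a 2 second default.
delay = parser.crawl_delay(USER_AGENT) or 2.0

if __name__ == "__main__":
    for url in polite_iter(allowed, delay_seconds=delay):
        print("would fetch", url)  # replace with a real fetch
```

A robots.txt check does not replace reading a site's terms of service; it only covers the crawling rules the site publishes for automated clients.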

For a deeper dive, read our guide on Web Scraping Legality by Country.

Conclusion

The "Most Scraped" list of 2025 reflects the global economy's reliance on real-time data. Whether it's pricing (Amazon), talent (LinkedIn), or assets (Zillow), the companies that win are the ones that have the best data, fastest.

If you are looking to build your own dataset from these sources, you don't need a team of engineers. Modern no-code tools place this power in the hands of analysts and founders.

Ready to start? Install Lection today and turn the web into your database.